In Artificial Intelligence (AI) and Machine Learning (ML), data augmentation is a quiet but powerful technique. At its core, it enriches the data that models learn from, making them more adaptable, resilient, and insightful. This article walks through data augmentation’s significance, applications, and future directions, keeping the focus on its foundational impact on AI and ML rather than on elaborate analogies.

Understanding Data Augmentation

Data Augmentation: A Cornerstone for AI and Machine Learning

In today’s fast-evolving digital world, Artificial Intelligence (AI) and Machine Learning (ML) stand at the forefront of transforming industries. These technologies power everything from your smartphone’s personal assistant to sophisticated forecasting models in finance. A critical ingredient in the ever-improving accuracy of these systems is something called data augmentation. But what exactly is data augmentation, and why does it play such a pivotal role in AI and ML?

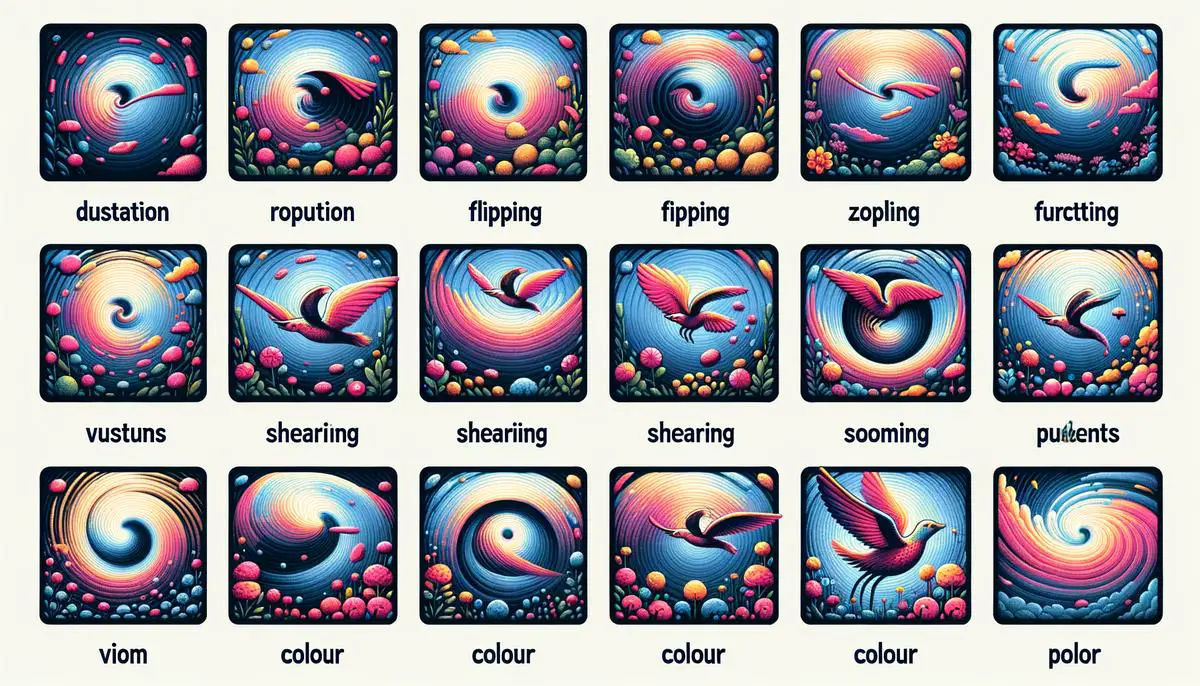

Simply put, data augmentation means adding slightly altered copies of existing data, or synthetic data derived from it, to a training set. For example, in image recognition, a common task for AI systems, data augmentation might involve flipping an image horizontally or vertically, zooming in or out, cropping edges, or adjusting the lighting conditions. This process generates additional training examples, helping machine-learning models better understand the variation in the real-world instances they are likely to encounter once deployed.

Now, you might wonder why simply having more original data isn’t enough. The primary reason revolves around something called overfitting—an obstacle many AI developers face. Overfitting occurs when an AI model learns details from the training data to a point where it performs well on said data but fails to generalize well to new, unseen datasets. In essence, it’s like memorizing answers for a test without understanding the underlying concepts; sure, you’ll ace that test but struggle when faced with questions framed differently.

Data augmentation effectively combats overfitting by providing a richer pool of training examples that stress-test different aspects and variations of given inputs. Through this enriched dataset, models can better approximate the variety and unpredictability inherent in real-world scenarios.

Moreover, in domains such as healthcare or security-related facial recognition, large labeled datasets can be expensive to build, unwieldy to handle, or impossible to collect because of privacy regulations. Collecting handwriting samples from thousands of individuals, for example, could take immense time and effort. In these settings, data augmentation is an invaluable tool for getting more out of the data already available without running into legal or logistical hurdles.

Another key benefit is model robustness: an AI system’s ability to maintain high performance across diverse inputs. Think of voice recognition software understanding heavy accents, or street-view systems correctly identifying faded stop signs under varying light conditions. Data augmentation builds this resilience by ensuring that models encounter a broad spectrum of scenarios during training, so they learn principles that hold across cases rather than memorizing the specifics of the cleaner samples they were trained on.

Engaging critically with data augmentation techniques is therefore central to deploying adaptable AI systems that can navigate an intricate and unpredictable world. Examples include social media feeds that filter spam while surfacing relevant content, and logistics networks that work out optimal routes dynamically when unplanned incidents disrupt the usual ones.

As computing power grows and devices are asked to handle inputs more nuanced than current architectures accommodate confidently, the careful calibration of training data, including the subtle variations that augmentation supplies, becomes more important.

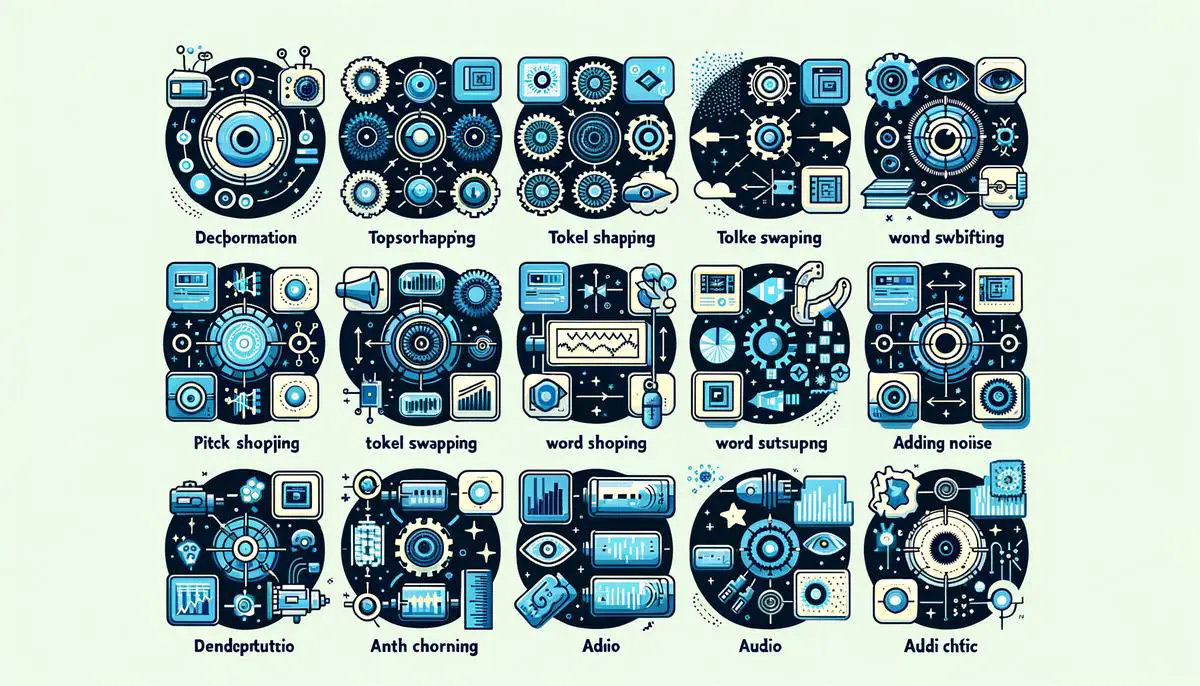

Types of Data Augmentation

Data augmentation is a cornerstone of improving machine learning models across varied kinds of data. It comprises techniques that enlarge training datasets, thereby enriching them without the need to collect additional data manually. This not only diversifies the training pool but can significantly improve the performance of AI models. Across data domains such as images, text, audio, and tabular data, data augmentation takes forms tailored to each type.

For Image Data:

- The transformation strategies involve geometric manipulations and color-space adjustments. Geometric changes may include rotations, scaling, cropping, flips (horizontal and vertical), and translations to simulate different perspectives of the same object. These procedures artificially increase the dataset size, helping models generalize to unseen data. Altering color-space attributes such as brightness, contrast, hue, and saturation helps models recognize objects under varying lighting conditions.
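The geometric and color-space transformations described above can be sketched with plain NumPy arrays. This is a minimal illustration, assuming an image stored as a height x width x 3 array of floats in [0, 1]; the helper names (`flip_horizontal`, `rotate_90`, `random_crop`, `adjust_brightness`, `adjust_contrast`) are hypothetical and not part of any library.

```python
import numpy as np


def flip_horizontal(image):
    """Mirror the image left to right by reversing the width axis."""
    return image[:, ::-1, :]


def rotate_90(image, k=1):
    """Rotate counterclockwise by k * 90 degrees in the image plane."""
    return np.rot90(image, k=k, axes=(0, 1))


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position inside the image."""
    h, w = image.shape[:2]
    top = rng.integers(0, h - crop_h + 1)
    left = rng.integers(0, w - crop_w + 1)
    return image[top:top + crop_h, left:left + crop_w, :]


def adjust_brightness(image, delta):
    """Shift every pixel by delta and keep values inside [0, 1]."""
    return np.clip(image + delta, 0.0, 1.0)


def adjust_contrast(image, factor):
    """Scale pixel distances from the mean intensity by factor."""
    mean = image.mean()
    return np.clip((image - mean) * factor + mean, 0.0, 1.0)


# Stand-in for a real photo: random pixels, 32 rows by 48 columns.
rng = np.random.default_rng(seed=0)
image = rng.random((32, 48, 3))

augmented = {
    "flipped": flip_horizontal(image),
    "rotated": rotate_90(image),
    "cropped": random_crop(image, 24, 24, rng),
    "brighter": adjust_brightness(image, 0.2),
    "low_contrast": adjust_contrast(image, 0.5),
}
```

Each entry in `augmented` is a new training example derived from the same source image. In practice the crop size and the brightness and contrast ranges would be chosen to match the conditions the model is expected to meet.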

In the Text Domain:

- Augmentation proves equally valuable but operates through linguistic adaptations. Techniques include synonym replacement (switching certain words with their synonyms), back-translation (translating a sentence into a different language and then back to the original language), and deliberately introducing grammatical errors. This helps natural language processing (NLP) applications better understand nuance and variety in human expression by expanding their exposure to diverse linguistic structures.
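Two of the text techniques above can be sketched with the standard library alone. The synonym table here is a tiny hand-written assumption (a real project would more likely draw on a thesaurus or lexical database), and `drop_random_word` is only a crude stand-in for introducing grammatical errors. Back-translation is left out because it needs a translation model.

```python
import random

# Hypothetical synonym table, written by hand for illustration only.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}


def synonym_replace(sentence, replace_prob, rng):
    """Replace each word that has known synonyms with probability replace_prob."""
    result = []
    for word in sentence.split():
        options = SYNONYMS.get(word.lower())
        if options and rng.random() < replace_prob:
            result.append(rng.choice(options))
        else:
            result.append(word)
    return " ".join(result)


def drop_random_word(sentence, rng):
    """Remove one word at random to imitate a grammatical slip."""
    words = sentence.split()
    if len(words) < 2:
        return sentence
    index = rng.randrange(len(words))
    return " ".join(words[:index] + words[index + 1:])


rng = random.Random(0)
original = "the quick dog is happy"
variants = [
    synonym_replace(original, 0.5, rng),
    drop_random_word(original, rng),
]
```

Simple whitespace splitting ignores punctuation and capitalization, so a production version would need a proper tokenizer.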

Audio Data Augmentation:

- Here, modifications include shifting pitch or speed and adding noise or echo effects, simulating the environmental sound variations that an audio-based model might encounter outside controlled lab conditions. Such enhancements can substantially improve how voice-activated services handle dialects or pick out spoken commands amid ambient noise.
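As a rough sketch of these audio modifications, the helpers below operate on a mono waveform held in a NumPy array. The function names and the 16 kHz sample rate are assumptions made for the example. Note that the simple resampling in `change_speed` changes pitch along with speed; separating the two requires more elaborate signal processing.

```python
import numpy as np


def add_noise(signal, snr_db, rng):
    """Add white Gaussian noise at a target signal-to-noise ratio in dB."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise


def change_speed(signal, rate):
    """Resample by linear interpolation; rate > 1 makes the clip shorter."""
    n_out = int(round(len(signal) / rate))
    old_positions = np.arange(len(signal))
    new_positions = np.linspace(0, len(signal) - 1, n_out)
    return np.interp(new_positions, old_positions, signal)


def add_echo(signal, delay_samples, decay):
    """Mix in a delayed, attenuated copy of the signal."""
    if not 0 < delay_samples < len(signal):
        raise ValueError("delay_samples must be between 1 and len(signal) - 1")
    out = signal.copy()
    out[delay_samples:] += decay * signal[:-delay_samples]
    return out


# One second of a 440 Hz tone as a stand-in for recorded speech.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440 * t)

rng = np.random.default_rng(seed=0)
noisy = add_noise(tone, snr_db=20, rng=rng)
faster = change_speed(tone, rate=1.25)
echoed = add_echo(tone, delay_samples=1600, decay=0.4)
```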

Tabular Data Enhancement:

- Augmentation here is less intuitive than for vision or language data, because tabular data is structured and revolves around numbers and categories rather than raw sensory input. Techniques typically rely on jittering (slightly adjusting numeric values within a set threshold) or on generating synthetic entries with algorithms that learn patterns in existing records and then produce plausible new ones, covering scenarios that the actual observations did not capture.
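Jittering is the simpler of the two ideas and can be sketched in a few lines. The record fields and the 5% threshold below are hypothetical; categorical fields are deliberately left untouched.

```python
import random


def jitter_row(row, numeric_keys, relative_scale, rng):
    """Return a copy of row with each numeric field scaled by a random
    factor in [1 - relative_scale, 1 + relative_scale]."""
    new_row = dict(row)
    for key in numeric_keys:
        factor = 1.0 + rng.uniform(-relative_scale, relative_scale)
        new_row[key] = row[key] * factor
    return new_row


rng = random.Random(0)
record = {"age": 42, "income": 55000.0, "segment": "retail"}

# Three jittered copies, each within 5% of the original numeric values.
synthetic = [jitter_row(record, ["age", "income"], 0.05, rng) for _ in range(3)]
```

Whether a jittered value still makes sense depends on the column: perturbing an income slightly is usually harmless, while perturbing an identifier or a count that must stay an integer is not.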

Common Practices Across Domains: Although the techniques vary widely with industry needs and domain constraints, some general measures remain important everywhere, such as shuffling the dataset after augmentation and keeping augmented classes balanced. Both help the training data reflect the ground truth more closely and expose the model to a broader, fairer range of examples.

Implementing Data Augmentation

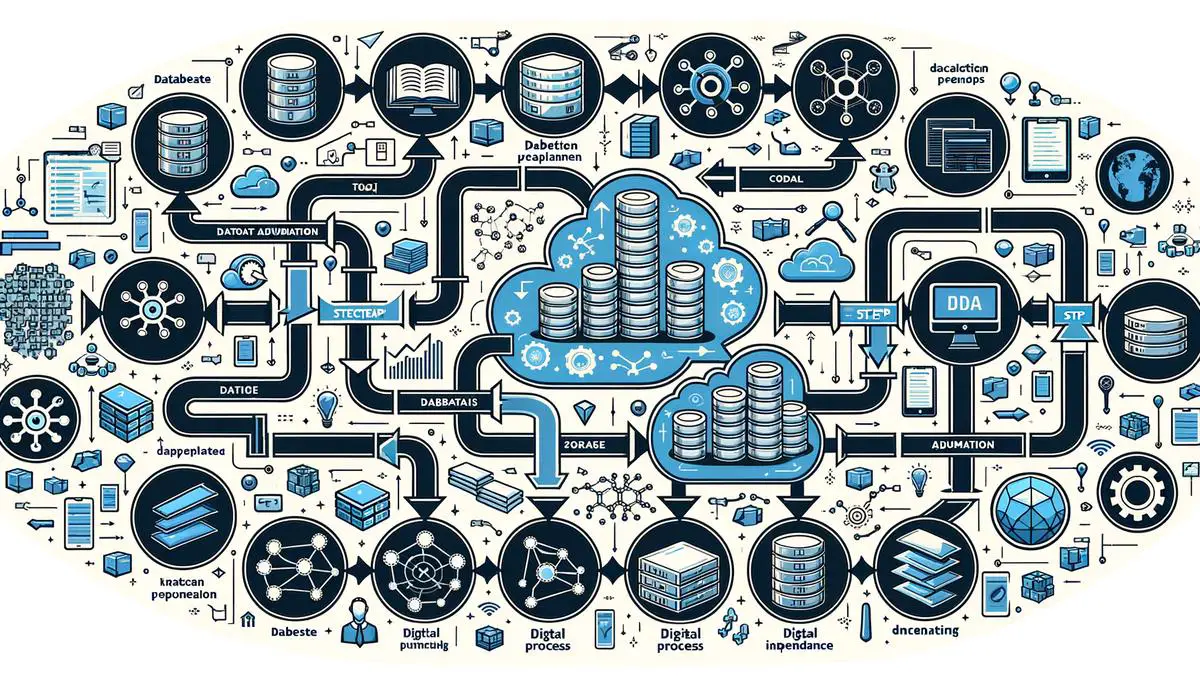

Implementing data augmentation within a data processing pipeline can materially improve an AI system’s efficiency and accuracy. Doing so starts with understanding the technical requirements and steps involved. The step-by-step guide below focuses on tailoring augmentation to a project’s needs.

1. Identify Data Augmentation Needs

The initial step involves a meticulous assessment of your dataset and the target problem. Certain datasets may exhibit imbalance or lack diversity, thereby necessitating specific augmentation techniques. For images, this can mean adding more variations through rotation or flipping, whereas, for text, it might involve synonym replacement or sentence restructuring.

2. Select Appropriate Augmentation Techniques

Having established the areas requiring augmentation, the selection of pertinent techniques comes into play. It could involve geometric transformations such as scaling or cropping for image datasets or noise injection in audio files to emulate real-world scenarios better. The technique should complement your datasets’ nature without distorting the underlying patterns you aim to model.

3. Integration Into Data Processing Pipelines

Efficient integration into existing pipelines is crucial for seamless operations. This involves embedding augmentation processes into the data loading phase — ensuring augmented data gets fed into training sessions dynamically. Carefully architecting the pipeline to include these steps without hampering performance demands thoughtful planning.
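One way to picture augmentation embedded in the data loading phase is a generator that transforms samples as it yields batches. This is only a sketch with hypothetical names (`augmenting_batches`, `add_small_noise`) and toy numeric samples; frameworks provide their own data loading abstractions that play the same role.

```python
import random


def augmenting_batches(samples, augment, batch_size, rng):
    """Yield shuffled batches, applying augment to each sample at load time."""
    indices = list(range(len(samples)))
    rng.shuffle(indices)
    for start in range(0, len(indices), batch_size):
        chunk = indices[start:start + batch_size]
        yield [augment(samples[i], rng) for i in chunk]


def add_small_noise(sample, rng):
    """Toy augmentation: perturb each feature with Gaussian noise."""
    return [value + rng.gauss(0.0, 0.01) for value in sample]


samples = [[float(i), float(i) * 2.0] for i in range(10)]
rng = random.Random(0)

for epoch in range(2):
    for batch in augmenting_batches(samples, add_small_noise, batch_size=4, rng=rng):
        pass  # a real training step would consume `batch` here
```

Because the transformation runs each time a batch is requested, every epoch sees a fresh variant of each sample and nothing augmented has to be stored on disk.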

4. Precision Over Manipulation Balance

In implementing augmentations, maintaining a balance between enhancing dataset diversity and preserving original information quality is vital. Excessive manipulation may lead to distorted inputs that could mislead rather than train models more effectively—keeping transformations realistic aids in retaining this balance.

5. Leverage Automation Tools

Several platforms and tools can automate augmentation, from libraries packed with ready-to-use functions (like TensorFlow’s ‘tf.image’ for images) to cloud services reachable through API calls (such as Amazon Rekognition, which is an image and video analysis service and is better suited to labeling or checking images than to generating augmented ones). These tools drastically reduce manual effort while allowing for customization.

6. Continuous Evaluation and Adjustment

Data augmentation isn’t a set-it-and-forget-it task; its effectiveness requires regular monitoring. Comparing model performance before and after augmentation helps in making necessary adjustments, for example reducing intensity or switching techniques if augmented data leads to decreased model accuracy.
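The adjustment rule described here can be written down as a small function. The name `adjust_intensity`, the step size, and the tolerance are all illustrative assumptions; the accuracies would come from evaluating on a held-out validation set with and without augmentation.

```python
def adjust_intensity(baseline_acc, augmented_acc, intensity, step=0.1, tolerance=0.005):
    """Lower the augmentation intensity when augmentation hurts validation
    accuracy by more than tolerance; otherwise leave it unchanged."""
    if augmented_acc < baseline_acc - tolerance:
        return max(0.0, intensity - step)
    return intensity


# Augmentation cost five points of accuracy, so intensity is reduced.
reduced = adjust_intensity(baseline_acc=0.90, augmented_acc=0.85, intensity=0.5)

# Augmentation helped, so intensity stays where it is.
kept = adjust_intensity(baseline_acc=0.90, augmented_acc=0.92, intensity=0.5)
```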

7. Compliance and Ethical Considerations

While aiming to enrich datasets via augmentation, ensure compliance with all ethical guidelines and legal requirements related to data privacy and copyright restrictions—especially pivotal when dealing with user-generated content or proprietary datasets from third parties.

Practical integration of these principles into one’s workflow represents an informed approach toward harnessing augmented datasets—a key driving force behind robust AI models capable of understanding diverse patterns and adapting across myriad situations.

By observing these guidelines, one advances from merely understanding WHAT data augmentation is to mastering HOW it gets efficiently integrated into enhanced pipelines; therein lies the difference between AI systems that perform adequately and those that are distinctly superior in adaptability and precision.

Challenges and Solutions in Data Augmentation

Given the extensive introduction to data augmentation and its implications in various fields, we now turn our attention toward the potential hurdles this technology might face and their possible solutions. Addressing such challenges is crucial to fully harness data augmentation’s capabilities in enriching AI algorithms and ensuring their effectiveness across diverse applications.

Potential Challenge 1: Data Distortion

One significant problem with data augmentation is the risk of data distortion. When information or images are excessively manipulated—whether it’s through rotation, zoom, or other means—the augmented data might lose its relevance or representational integrity, thus leading further away from reality rather than mirroring it. This scenario can mislead AI models rather than train them with accuracy.

Solution: The key here is balance. Setting definite parameters and thresholds for each augmentation technique keeps distortions to a minimum. Established augmentation libraries help by letting you bound the extent of modification to what suits a given dataset type, without compromising its authenticity.
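Explicit parameters and thresholds might look like the following sketch. The class `AugmentationLimits`, its fields, and the default values are hypothetical; suitable limits depend entirely on the dataset.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class AugmentationLimits:
    """Upper bounds on how far each transformation may go (illustrative values)."""
    max_rotation_deg: float = 15.0
    max_zoom: float = 0.2
    max_brightness_delta: float = 0.1


def clamp(value, limit):
    """Restrict value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


def sample_params(limits, rng):
    """Draw random augmentation parameters that never exceed the limits."""
    return {
        "rotation_deg": rng.uniform(-limits.max_rotation_deg, limits.max_rotation_deg),
        "zoom": 1.0 + rng.uniform(-limits.max_zoom, limits.max_zoom),
        "brightness_delta": rng.uniform(
            -limits.max_brightness_delta, limits.max_brightness_delta
        ),
    }


limits = AugmentationLimits()
params = sample_params(limits, random.Random(0))

# A requested rotation of 40 degrees is pulled back to the configured bound.
safe_rotation = clamp(40.0, limits.max_rotation_deg)
```

Keeping the limits in one immutable object makes them easy to review and to version alongside the dataset they were tuned for.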

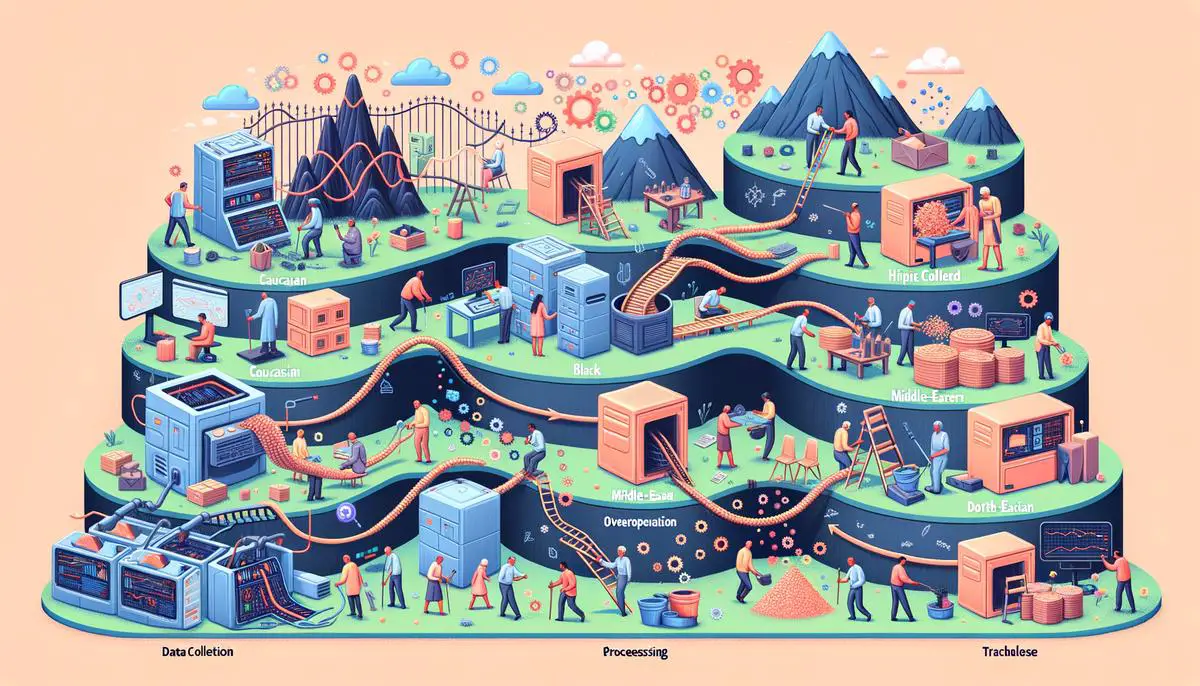

Potential Challenge 2: Bias Amplification

An often-overlooked facet is how data augmentation could unintentionally reinforce existing biases within the dataset. For instance, if an initial dataset predominantly features a particular demographic characteristic and augmentation techniques inadvertently emphasize these characteristics further, AI models might develop biased outcomes.

Solution: To combat this issue, it’s vital to first recognize and understand existing biases within original datasets thoroughly. Next, deliberately designing augmentation processes that introduce diversity or correct imbalance can mitigate bias reinforcement. AI developers should constantly monitor output for bias indicators and adjust augmentation approaches accordingly.
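A first step toward correcting imbalance is simply counting how each group is represented and working out how many augmented samples each would need to reach parity. The sketch below assumes every sample carries a group label; the function name `augmentation_quota` and the toy labels are hypothetical.

```python
from collections import Counter


def augmentation_quota(labels):
    """For each group, return how many augmented samples are needed
    to match the size of the largest group."""
    counts = Counter(labels)
    target = max(counts.values())
    return {group: target - count for group, count in counts.items()}


labels = ["a"] * 6 + ["b"] * 2 + ["c"] * 4
quota = augmentation_quota(labels)
```

Here group "b" needs four extra samples and group "c" needs two, while "a" needs none. Matching counts is only one notion of balance, and it does not by itself remove bias that is present in the content of the samples.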

Potential Challenge 3: Computational Overheads

Implementing advanced data augmentation comes with its set of demands on computational resources. The generation and processing of large volumes of augmented data require significant processing power and storage capacity, making deployment less feasible or scalable for smaller projects or organizations with limited technological infrastructure.

Solution: Cloud-based computing offers a way out by providing scalable resources tailored to project needs without substantial investment in physical IT infrastructure. Additionally, strategies like on-the-fly augmentation, where modifications are applied during training rather than in a pre-processing phase, help manage computational loads more practically.

Potential Challenge 4: Maintaining Data Relevance Across Context

When applying universal techniques across varying datasets indiscriminately—or transferring approaches from one domain to another—there runs a risk of losing contextual relevance such that augmented data no longer accurately represents real-world scenarios for which the model was intended.

Solution: To keep augmented data applicable across domains, or across contexts within a domain, use customizable augmentation approaches tuned with an understanding of each context. AI-driven tooling is a promising way to guide this fine-tuning, using model performance feedback gathered during training.

Addressing these challenges requires thoughtful consideration during the planning phase and ongoing vigilance throughout model development, along with analytics tools for scrutinizing outputs in detail, so that the chosen solutions add real, transferable value rather than letting progress stagnate.

Future of Data Augmentation

Continuing the exploration of data augmentation technologies and practices, we turn to newer territory: innovative approaches that industries and researchers have yet to fully embrace. These future-oriented strategies sit at the intersection of creativity and algorithmic sophistication, pointing the way to emerging AI enhancements and measures for preserving data integrity.

Adaptive Augmentation Strategies

As technology evolves, adaptive augmentation strategies are coming to the forefront. These use algorithms that dynamically adjust augmentation techniques based on the specific needs of the data or model during training. Instead of employing a set of predetermined augmentations, adaptive systems analyze the performance impact of various augmentations in real time, selecting those that improve model accuracy. This responsive approach aims for models that are not just robust but attuned to the subtleties of demanding applications, from healthcare diagnostics to automated customer support.
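To make the idea concrete, here is a minimal sketch of one possible selection rule: an epsilon-greedy picker that favors whichever augmentation has produced the best average validation gain so far. This is a simple bandit-style heuristic chosen for illustration, not the method of any particular adaptive augmentation system, and the class and augmentation names are hypothetical.

```python
import random


class AdaptiveAugmentationPicker:
    """Track the observed accuracy gain of each augmentation and
    prefer the best one, while still exploring occasionally."""

    def __init__(self, names, epsilon=0.1, rng=None):
        self.names = list(names)
        self.epsilon = epsilon
        self.rng = rng if rng is not None else random.Random()
        self.total_gain = {name: 0.0 for name in self.names}
        self.uses = {name: 0 for name in self.names}

    def mean_gain(self, name):
        """Average gain recorded for name, or 0.0 if it was never used."""
        if self.uses[name] == 0:
            return 0.0
        return self.total_gain[name] / self.uses[name]

    def choose(self):
        """Try each augmentation once, then explore with probability epsilon."""
        untried = [name for name in self.names if self.uses[name] == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.names)
        return max(self.names, key=self.mean_gain)

    def update(self, name, gain):
        """Record the change in validation accuracy seen after using name."""
        self.total_gain[name] += gain
        self.uses[name] += 1


picker = AdaptiveAugmentationPicker(["flip", "rotate", "noise"], epsilon=0.0)
picker.update("flip", 0.02)
picker.update("rotate", -0.01)
picker.update("noise", 0.005)
best = picker.choose()
```

In a training loop, `choose` would be called before each round and `update` afterward with the measured change in validation accuracy.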

Augmented Reality Data for Training AI

Augmented reality (AR) presents an exciting frontier for data augmentation. By superimposing virtual objects onto real-world contexts, AR generates fresh datasets that blend virtual and physical details, providing new material for AI training scenarios. For instance, in autonomous vehicle development, simulated pedestrians or obstacles can be merged with actual street footage to create richly varied driving environments without the risks involved in capturing such scenarios directly. As AR technology continues gaining sophistication, its contribution to creating diverse, challenge-rich datasets is anticipated to expand significantly.
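At its simplest, merging a virtual object into real footage is an alpha-blending operation on pixel arrays. The sketch below assumes float images in [0, 1], an overlay that fits entirely inside the background, and a per-pixel opacity mask; the function name `composite` and the toy arrays are hypothetical stand-ins for a street frame and a rendered pedestrian.

```python
import numpy as np


def composite(background, overlay, alpha_mask, top, left):
    """Alpha-blend overlay onto a copy of background at position (top, left).

    alpha_mask holds one opacity value in [0, 1] per overlay pixel.
    """
    out = background.copy()
    h, w = overlay.shape[:2]
    region = out[top:top + h, left:left + w, :]
    alpha = alpha_mask[:, :, None]  # broadcast opacity over color channels
    out[top:top + h, left:left + w, :] = alpha * overlay + (1.0 - alpha) * region
    return out


# Stand-ins: a dark 64x64 "street frame" and a bright 8x8 "object".
frame = np.zeros((64, 64, 3))
virtual_object = np.ones((8, 8, 3))
opacity = np.ones((8, 8))

scene = composite(frame, virtual_object, opacity, top=10, left=20)
```

Varying the position, scale, and opacity of the inserted object yields many distinct training scenes from a single piece of footage, though realistic results also depend on matching lighting and perspective, which this sketch does not attempt.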

Synthetic Data Generation

Closely related to AR, yet distinct from it, is synthetic data generation: automatically creating content that AI models can learn from. This includes everything from synthetically generated human faces indistinguishable from real ones to entirely fabricated urban landscapes that adhere closely to architectural standards. Synthetic data is an essential tool in domains where data sensitivity or scarcity hampers AI development. Looking ahead, advances in generative adversarial networks (GANs) promise to meet growing demand for large, varied datasets, enabling machine learning work that ethical guidelines around real data use would otherwise constrain.

Federated Learning & Data Augmentation

Decentralized approaches to machine learning bring federated learning into data augmentation. Federated learning allows AI models to learn from large distributed datasets without centralized storage, which substantially enhances privacy and security. Combining the two means developing augmentation techniques that work not only with locally sourced patterns but also with the collective insights spread across dispersed geographies or institution-specific data pools.

Glass-Box Data Augmentation Approaches

Grounded in interpretability, the ‘glass-box’ movement within ML/AI, this approach pushes toward opening up formerly opaque augmentation algorithms, clarifying exactly how inputs are modified relative to a baseline and which modifications account for performance gains. The result is systems that are easier to diagnose, in which skewed representations are easier to correct, and which both AI experts and lay stakeholders can understand, building the broad-based trust that widely deployed connected technologies need.

In summary, as research accelerates alongside technological progress, principled augmentation practices remain essential to turning these innovations into dependable systems.

Record Keeping Across Context

As augmentation methods evolve, keeping records of which augmentations were applied, and in which context, helps governance frameworks and regulatory requirements keep pace with adaptable methodologies.

As we stand on the brink of what can only be described as a new era in technology, the role of data augmentation cannot be overstated. It is not just about creating additional data; it is about crafting experiences that teach AI to perceive our world with nuance and sensitivity. Through sophisticated augmentation techniques and ethical considerations, we are teaching machines not just to see but to understand – paving the way for an interconnected future where AI systems offer solutions that are as diverse as the problems they aim to solve. The exploration of data augmentation thus represents more than a technical endeavor; it is a commitment to harnessing technology for a future where human intelligence and artificial wisdom coalesce seamlessly.

Emad Morpheus is a tech enthusiast with a unique flair for AI and art. Backed by a Computer Science background, he dove into the captivating world of AI-driven image generation five years ago. Since then, he has been honing his skills and sharing his insights on AI art creation through his blog posts. Outside his tech-art sphere, Emad enjoys photography, hiking, and piano.