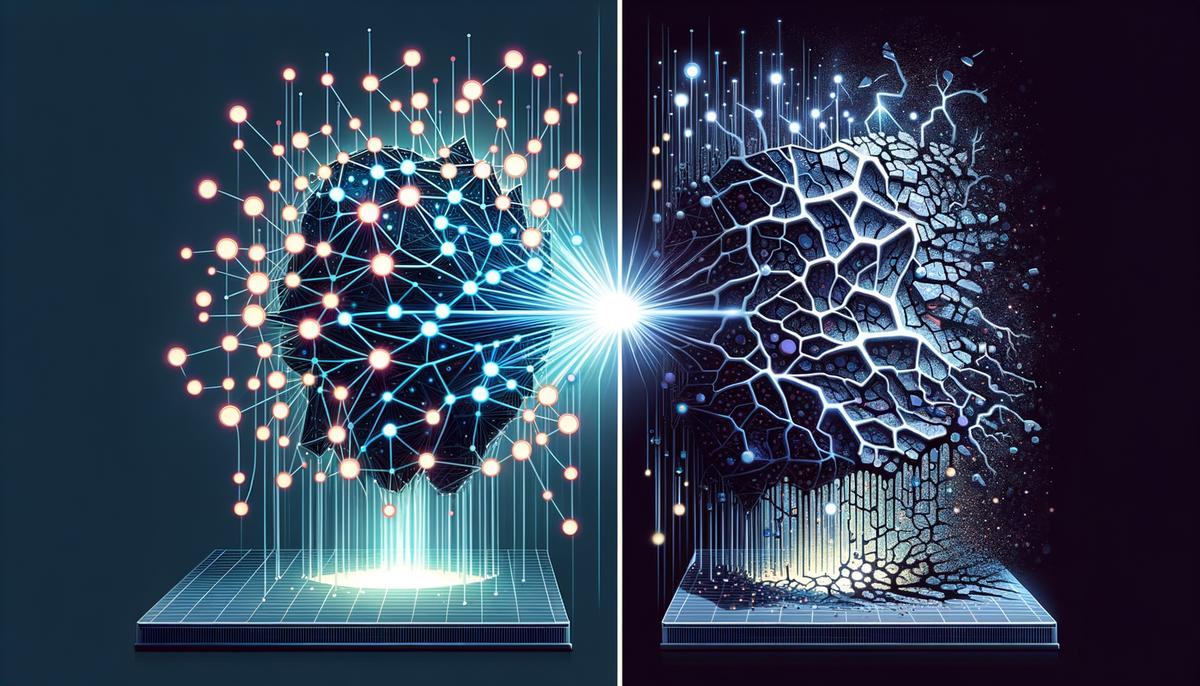

Data leakage in machine learning is a subtle yet significant issue that can dramatically inflate a model’s measured performance, producing overoptimistic results that fail to hold up in real-world applications. This phenomenon, akin to having the answers before the test, requires careful attention and specific strategies to address effectively. By understanding how data leakage occurs and what it implies, we can better mitigate its effects and develop more reliable and accurate machine learning models.


Defining Data Leakage

Data leakage in machine learning is a sneaky troublemaker: information from outside the training data, or information that would not be available at prediction time, quietly slips into the creation of the model. Think of it as cheating on an exam by having the answers in advance; it might seem like you’re doing well, but it doesn’t truly test your knowledge. Similarly, when a model benefits from data leakage, its performance looks impressively high on validation and test data, but when faced with genuinely new, real-world data, it stumbles.

One classic example of data leakage occurs during the preprocessing phase. Imagine you’re normalizing or standardizing your dataset as a whole before splitting it into training and testing sets. The means, standard deviations, or minimum and maximum values used for the transformation are then computed partly from the test rows, so information from the test set, which should stay unseen during training, unintentionally sneaks into the model. This is akin to glimpsing part of the exam before the big day; it gives the model an advantage it has not truly earned.
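The difference between the two orderings can be sketched with scikit-learn’s `StandardScaler`. This is a minimal illustration on made-up synthetic data, assuming scikit-learn and NumPy are installed: the leaky scaler learns its mean from every row, while the correct one learns it from the training rows only and then reuses those statistics on the test rows.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic data for illustration only
rng = np.random.default_rng(0)
X = rng.normal(loc=50.0, scale=10.0, size=(200, 3))
y = (X[:, 0] > 50).astype(int)

# Leaky: scaling statistics come from all rows, including future test rows
leaky_scaler = StandardScaler().fit(X)

# Correct: split first, then fit the scaler on the training rows only
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)
scaler = StandardScaler().fit(X_train)
X_train_scaled = scaler.transform(X_train)
X_test_scaled = scaler.transform(X_test)  # test rows reuse the training statistics

print("leaky mean:     ", leaky_scaler.mean_)
print("train-only mean:", scaler.mean_)
```

In practice the effect of leaky scaling alone is often small, but the same ordering rule applies to every fitted preprocessing step, including imputation, encoding, and dimensionality reduction, where the leak can be larger.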

Another sneaky spot for data leakage is time-series analysis. Let’s say you’re predicting stock prices based on past performance. Incorporating future information into past data, such as using tomorrow’s price as a feature to predict today’s, turns the whole exercise into a charade. It’s like betting on a game after you already know the final score: it sure sounds like a winning strategy, but it’s far from fair play.
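In code, this kind of leak often comes down to the direction of a single shift. The sketch below uses pandas with a handful of made-up closing prices and treats today’s close as the target. `shift(1)` builds features from the past, while `shift(-1)` quietly pulls tomorrow’s value into today’s row. The column names are hypothetical.

```python
import pandas as pd

# Made-up daily closing prices; today's close is the value we want to predict
prices = pd.DataFrame(
    {"close": [100.0, 101.5, 99.8, 102.3, 103.1, 104.0]},
    index=pd.date_range("2024-01-01", periods=6, freq="D"),
)

# Safe: yesterday's close is known when today's prediction is made
prices["close_lag1"] = prices["close"].shift(1)

# Safe: mean of the three previous closes (shift first so today's target is excluded)
prices["mean_prev3"] = prices["close"].shift(1).rolling(3).mean()

# Leaky: shift(-1) places tomorrow's close in today's row
prices["close_lead1"] = prices["close"].shift(-1)

print(prices)
```

A useful review habit is to confirm that every feature for row *t* is built only from rows before *t*. A rolling window that includes the current row, or one centered on it, breaks that rule just as `shift(-1)` does.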

Moreover, feature selection procedures can also be culprits of data leakage. If you examine the entire dataset to select features before splitting it into training and test sets, congratulations, you’ve just let the cat out of the bag early. The chosen features were picked partly for how well they match the test labels, so the model appears to know the script when it actually doesn’t.
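A well-known way to see this effect is to run feature selection on pure noise. In the sketch below, which assumes scikit-learn and uses entirely random synthetic data, the labels are unrelated to the features, so any honest estimate should hover around chance (0.5). Selecting features on all rows before cross-validation typically produces a much higher score. Putting `SelectKBest` inside a pipeline refits the selection on each training fold and removes the leak.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(42)
X = rng.normal(size=(50, 5000))  # 5000 pure-noise features
y = rng.integers(0, 2, size=50)  # labels unrelated to X

# Leaky: choose the 20 "best" features using all 50 rows, then cross-validate
X_selected = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky = cross_val_score(LogisticRegression(max_iter=1000), X_selected, y, cv=5).mean()

# Correct: selection is refit inside each training fold by the pipeline
pipe = make_pipeline(SelectKBest(f_classif, k=20), LogisticRegression(max_iter=1000))
honest = cross_val_score(pipe, X, y, cv=5).mean()

print(f"leaky CV accuracy:  {leaky:.2f}")
print(f"honest CV accuracy: {honest:.2f}")
```

The exact numbers depend on the random seed. The gap between the two scores, however, comes entirely from the selection step having seen the held-out labels.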

Improper use of validation strategies can also lead to data leakage. For instance, applying cross-validation without keeping related blocks of data together, such as multiple records from the same patient or customer, or consecutive observations in a time series, can place nearly identical information in both the training and testing folds. It’s akin to rehearsing a play with the script in hand, only to falter when performing live without it: the rehearsal didn’t truly prepare you for the real deal.
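For grouped records, scikit-learn’s `GroupKFold` keeps every row of a group on the same side of each split. The sketch below assumes a hypothetical dataset of 12 records from 4 patients and checks that no patient appears in both the training and test folds.

```python
import numpy as np
from sklearn.model_selection import GroupKFold

# Hypothetical data: 12 records from 4 patients (3 records each)
X = np.arange(24).reshape(12, 2)
y = np.array([0, 1] * 6)
patient_ids = np.repeat(["p1", "p2", "p3", "p4"], 3)

test_groups_per_fold = []
for train_idx, test_idx in GroupKFold(n_splits=4).split(X, y, groups=patient_ids):
    train_patients = {str(p) for p in patient_ids[train_idx]}
    test_patients = {str(p) for p in patient_ids[test_idx]}
    # No patient contributes rows to both sides of a split
    assert train_patients.isdisjoint(test_patients)
    test_groups_per_fold.append(sorted(test_patients))

print(test_groups_per_fold)
```

With plain `KFold`, rows from the same patient could land in both folds, letting the model recognize the individual rather than learn a pattern that generalizes to new patients.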

To take an example, consider predictive healthcare models. If the training data includes diagnostic results that would not be available at the time of prediction in real life, that’s a classic case of looking into the crystal ball with hindsight. The model, inflated with confidence from knowing outcomes ahead of time, will likely falter in real-world use, where such knowledge isn’t available.
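One way to guard against this is to record when each feature becomes available and filter on it. The sketch below is a hypothetical feature catalogue for a model that predicts at hospital admission (time 0). The `FeatureInfo` class, the feature names, and the timings are all illustrative assumptions, not part of any real library or dataset.

```python
from dataclasses import dataclass


@dataclass
class FeatureInfo:
    name: str
    hours_after_admission: float  # when the value is first recorded (hypothetical metadata)


# Hypothetical catalogue for a model that makes its prediction at admission (t = 0)
catalogue = [
    FeatureInfo("age", 0.0),
    FeatureInfo("triage_heart_rate", 0.0),
    FeatureInfo("prior_admissions", -1.0),  # known before the visit
    FeatureInfo("biopsy_result", 72.0),     # only exists after the prediction point
    FeatureInfo("discharge_code", 120.0),   # recorded at discharge
]


def usable_features(features, prediction_time=0.0):
    """Names of features recorded no later than the prediction time."""
    return [f.name for f in features if f.hours_after_admission <= prediction_time]


def leaky_features(features, prediction_time=0.0):
    """Names of features that would only exist after the prediction is made."""
    return [f.name for f in features if f.hours_after_admission > prediction_time]


print("keep:", usable_features(catalogue))
print("drop:", leaky_features(catalogue))
```

The hard part in practice is building an accurate catalogue, which usually requires input from the people who know how and when each field is recorded.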

Crucially, identifying and remedying data leakage requires vigilance and a keen eye for detail. Spotting these leaks often involves tracing the flow of data through preprocessing, training, and validation phases, ensuring that each step is impermeable to future information or cross-contamination between training and test sets.

To prevent data leakage, partition your data before any kind of processing, and fit every preprocessing step on the training portion only. Training models strictly on designated training sets and validating them properly can seal these leaks. By adhering to rigorous validation and separation protocols, machine learning practitioners can safeguard their models against the sly slip of data leakage and ensure their creations can truly perform in unknown waters, just like rehearsing without peeking at the final act so the performance genuinely captivates when the curtain rises.

Identifying Types of Data Leakage

Data preparation stands out as a critical phase where data leakage often slips through the cracks. A striking illustration is when the scaler is fit on the entire dataset instead of being fit on the training set alone and then applied, unchanged, to the test set. This oversight lets statistics from the test set sneak into the training phase, inflating performance metrics. The whole premise of scaling is to put features on comparable ranges so that one does not dominate the others. However, when the scaling statistics are computed from both sets together, the model indirectly gains insight into the test set, and the evaluation no longer measures performance on truly unseen data.

Turning the spotlight on model evaluation reveals another arena for potential data leakage: the misuse of validation sets. The cardinal rule of splitting data into training, validation, and test sets is to simulate real-world scenarios where the model encounters unseen data. However, if the same validation set is used over and over to tune model parameters, the model can become overly optimized for that specific set rather than for general prediction. Such misuse blurs the line between genuine predictive accuracy and overfitting, making the model’s performance appear stellar in the controlled environment but potentially lackluster in practical application.
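A common safeguard is to lock away a final test set before any tuning, run the hyperparameter search with cross-validation on the remaining data, and score the test set exactly once. The sketch below assumes scikit-learn and uses its bundled breast cancer dataset. The parameter grid is an arbitrary choice for illustration.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Set the test data aside before any tuning happens
X_dev, X_test, y_dev, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# All hyperparameter search uses cross-validation on the development data only
search = GridSearchCV(
    make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    param_grid={"logisticregression__C": [0.01, 0.1, 1.0, 10.0]},
    cv=5,
)
search.fit(X_dev, y_dev)

# The test set is scored once, after tuning is finished
test_accuracy = search.score(X_test, y_test)
print(search.best_params_, round(search.best_score_, 3), round(test_accuracy, 3))
```

`best_score_` is itself slightly optimistic because it was used to pick the winner. The single test-set score is the less biased estimate, as long as no further tuning is done after looking at it.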

Drilling down further, these two types of data leakage, during data preparation and during model evaluation, manifest in subtle yet impactful ways. For instance, leaking future information in time-series datasets during scaling or validation not only defeats the purpose of forecasting but also gives a false sense of security about the model’s predictive power. Equally, over-reliance on validation set performance when adjusting a model can lead developers down a rabbit hole of endless tweaking that does more harm than good.

Understanding these nuances underscores the importance of vigilance throughout the machine learning pipeline. Data leakage is not just about large, glaring errors; it can also arise from small missteps with significant consequences. Approaching both data preparation and model evaluation with a clear strategy to partition and protect datasets will guard against the insidious effects of data leakage, ultimately fostering models that are robust in theory and in practice.

Consequences of Data Leakage

Data leakage can make a machine learning model look like a star during evaluation but a dud in practice, leading to inflated confidence in its predictive power. For instance, models affected by data leakage often present strikingly high accuracy scores during the testing phase, demonstrating what seems to be an uncanny ability to predict outcomes. This illusion shatters when the model is applied to new, real-world data, where performance drops sharply because the model learned from information it would not have in a true forecasting scenario.

A vivid example comes from the finance sector, where a trading algorithm might be developed to predict stock movements. If this model inadvertently incorporates future price information during training, its accuracy in historical backtests would be remarkably high. However, when deployed in live market conditions, its effectiveness is severely compromised, since during training it effectively ‘knew’ about future events, a luxury unavailable in real-time decision-making. The outcome? Potentially large financial losses and a loss of trust in predictive modeling.

Moreover, erroneous decision-making is a likely consequence of data leakage, affecting not just businesses but also people whose lives and well-being might depend on these predictions. In law enforcement, for example, predictive policing models aim to forecast crimes or recidivism rates. A model affected by data leakage might inadvertently flag certain demographics as high-risk at disproportionate rates, particularly when the leaked information also carries historical bias. Consequently, it could perpetuate discriminatory practices and unfair scrutiny of particular groups, undermining justice and equity.

In healthcare, consider a medical diagnosis AI trained on patient data that unintentionally includes outcome data. Such a model may appear exceptionally proficient at diagnosing diseases during validation. Yet its real-world deployment can lead to false diagnoses, either by missing conditions it was trained to detect or by indicating diseases where there are none. The ramifications include unnecessary anxiety for patients, inappropriate treatments, and overlooked conditions that could have serious health implications.

Manufacturers eager to deploy predictive maintenance algorithms face similar perils. Misled by the inflated accuracy of leaky models, they might plan maintenance schedules around flawed predictions. The result can be premature maintenance that drives up operational costs or, worse, a missed prediction of a critical failure that leads to equipment breakdown, halted production, and significant losses.

This problematic landscape underscores the importance of robust model validation techniques capable of identifying and neutralizing the effects of data leakage. Beyond the direct economic and operational repercussions, data leakage can erode confidence in machine learning models, deterring businesses and individuals from using the technology for decision-making. Addressing data leakage therefore extends beyond technical fixes; it is about preserving trust in machine learning as a reliable tool for future advancements.

Detecting Data Leakage

Detecting data leakage demands close scrutiny of every stage of a machine learning project, from data collection to model tuning. Auditing data handling processes plays a vital role in this endeavor: a careful examination of how data moves through the pipeline can uncover unintended leaks. For example, documenting where each column comes from and when it is recorded helps catch information that should never reach the model training phase.

Analyzing feature selection is another critical step. By reviewing the features used in the model, practitioners can identify whether any information from the future, which would not be available at the time of prediction, is being used. This involves checking when each feature is recorded relative to the target variable, so that every feature is known before the moment the prediction is made.
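Temporal misalignment is not always visible from column names. One practical heuristic, offered here as an assumption rather than a standard test, is to flag any single feature that predicts the target almost perfectly on its own, because such a feature is often recorded after, or because of, the outcome. The sketch uses synthetic data, a hypothetical `treatment_started` field, and an arbitrary 0.95 ROC AUC threshold.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

# Synthetic data for illustration only
rng = np.random.default_rng(0)
n = 500
y = rng.integers(0, 2, size=n)
features = {
    "age": rng.normal(50, 12, size=n),
    "blood_pressure": rng.normal(120, 15, size=n) + 3 * y,  # weak genuine signal
    "treatment_started": y + rng.normal(0, 0.05, size=n),   # hypothetical post-outcome field
}


def flag_suspicious(features, y, threshold=0.95):
    """Flag features whose univariate ROC AUC, in either direction, reaches the threshold."""
    flagged = {}
    for name, values in features.items():
        auc = roc_auc_score(y, values)
        auc = max(auc, 1 - auc)  # a perfectly inverted feature is just as suspicious
        if auc >= threshold:
            flagged[name] = round(auc, 3)
    return flagged


print(flag_suspicious(features, y))
```

A flagged feature is not proof of leakage. Some features are genuinely strong predictors, so each flag should lead to a check of when and how that value is recorded.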

Cross-validation is a powerful tool against data leakage, but it must be employed correctly. Standard k-fold cross-validation might not be appropriate for time-series data: because it ignores the sequential order, its training folds can contain observations recorded after those in the test fold. Time-based splits, in which the model is always trained on earlier observations and evaluated on later ones, provide a more realistic evaluation of performance and help expose leaks that occur when future information is inadvertently used in training.
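scikit-learn provides `TimeSeriesSplit` for this kind of forward-chaining evaluation. The minimal sketch below assumes the rows are already sorted from oldest to newest, and checks that every training index comes before every test index in each split.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

# 12 observations, assumed to be sorted from oldest to newest
X = np.arange(12).reshape(-1, 1)

splits = list(TimeSeriesSplit(n_splits=3).split(X))
for train_idx, test_idx in splits:
    # Every training index precedes every test index: the model never sees the future
    assert train_idx.max() < test_idx.min()
    print("train:", train_idx, "test:", test_idx)
```

`TimeSeriesSplit` also accepts a `gap` parameter that leaves a buffer of samples between the training and test windows, which can help when features are built from look-back windows that would otherwise straddle the boundary.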

Libraries such as scikit-learn in Python offer functionality that helps prevent data leaks. For instance, when a scikit-learn Pipeline is passed to cross-validation utilities such as cross_val_score, preprocessing steps like scaling and encoding are fit on the training portion of each fold rather than on the full dataset beforehand. This prevents data from the held-out fold from influencing the transformations applied to the training data.
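Here is a minimal sketch of that pattern with both scaling and one-hot encoding. It assumes scikit-learn and pandas, and uses a synthetic table whose column names are made up for illustration. Because the whole `Pipeline` is handed to `cross_val_score`, the scaler and encoder are refit on the training portion of every fold.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Synthetic table for illustration only
rng = np.random.default_rng(0)
n = 300
df = pd.DataFrame({
    "income": rng.normal(50_000, 15_000, size=n),
    "age": rng.integers(18, 80, size=n),
    "region": rng.choice(["north", "south", "east", "west"], size=n),
})
y = (df["income"] > 50_000).astype(int)

preprocess = ColumnTransformer([
    ("num", StandardScaler(), ["income", "age"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["region"]),
])
model = Pipeline([("prep", preprocess), ("clf", LogisticRegression(max_iter=1000))])

# The scaler and encoder are refit on each training fold, never on the held-out fold
scores = cross_val_score(model, df, y, cv=5)
print(scores.round(3), scores.mean().round(3))
```

`handle_unknown="ignore"` matters here: a category that appears only in a held-out fold is encoded as all zeros instead of raising an error, which mirrors what happens with unseen categories in production.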

Finally, the importance of continuous monitoring and reevaluation of machine learning models cannot be overstated. Data characteristics change over time; a setup that was leak-free at inception could become vulnerable as new data flows into the system. Regularly reexamining data handling and processing steps, adjusting cross-validation methodologies, and keeping software tools up to date are key practices for detecting new or previously overlooked instances of data leakage.

These actionable steps underscore the importance of a disciplined approach to detecting data leakage early in the model development process, securing the integrity and reliability of machine learning projects.

Preventing Data Leakage

Proper data management goes beyond initial preparation or feature selection; it involves holistic data hygiene practices throughout the lifecycle of a machine learning project, with management strategies designed from the outset to minimize leakage risks. To start with, strict access control over held-out test data blocks the kind of peeking that could otherwise lead to inadvertent leaks.

The importance of partitioning data effectively cannot be overstated, as it is one of the core precautions against data leakage. Techniques like k-fold cross-validation help assess how the model will perform on an independent dataset. Variants refine this further: stratified k-fold preserves the original class proportions in every fold, and group k-fold keeps related records, such as all rows from one patient, within a single fold.
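The stratified variant is easy to check on an imbalanced label vector. The sketch below assumes scikit-learn and uses 100 made-up labels with 10% positives. `StratifiedKFold` assigns the positives evenly, so every test fold keeps the same 10% rate.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Made-up imbalanced labels: 10 positives out of 100
y = np.array([1] * 10 + [0] * 90)
X = np.zeros((100, 1))  # feature values do not affect how the folds are stratified

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
fold_rates = [y[test_idx].mean() for _, test_idx in skf.split(X, y)]
print(fold_rates)
```

With plain `KFold` and no shuffling, these ordered labels would put every positive into the first fold, leaving the other folds with no positives at all.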

Training, validation, and test sets should be separated not just mechanically but in a way that reflects real-world conditions, for example by splitting on time when the model will forecast the future. Additionally, simulating blind test conditions, with a final dataset that is held back and evaluated only once, can pinpoint leaks that standard validation approaches miss, which is especially critical when mimicking operational environments.

Leveraging domain knowledge for feature engineering is paramount. Beyond enhancing model performance, it offers a protective layer against the unintentional inclusion of features that are predictive but inappropriate, for instance because they would not exist at prediction time. This endeavor demands close dialogue between data scientists and domain experts to delineate the boundaries of ethical and methodologically sound feature selection.

Pipeline architecture acts as a guardian against mixing data during preprocessing. By keeping training and test data flows cleanly separated, it ensures that information from the test set does not bleed into the training process. Automated checks within the pipeline, such as verifying that no record appears in both the training and test sets, can serve as an early warning system for data integrity issues.
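Automated checks like these can be small functions that fail fast. The two guards below are hypothetical helpers, not part of any library: one rejects record IDs shared between splits, and the other rejects exact duplicate feature rows. The sketch assumes NumPy and assumes both arrays share the same dtype, since the row comparison works on raw bytes.

```python
import numpy as np


def check_no_overlap(train_ids, test_ids):
    """Hypothetical guard: fail if any record ID appears in both splits."""
    shared = set(train_ids) & set(test_ids)
    if shared:
        raise ValueError(f"{len(shared)} record(s) in both train and test: {sorted(shared)[:5]}")


def check_no_duplicate_rows(X_train, X_test):
    """Hypothetical guard: fail if an identical feature row exists in both splits.

    Assumes X_train and X_test have the same dtype, because rows are compared as raw bytes.
    """
    train_rows = {row.tobytes() for row in np.ascontiguousarray(X_train)}
    dupes = sum(row.tobytes() in train_rows for row in np.ascontiguousarray(X_test))
    if dupes:
        raise ValueError(f"{dupes} test row(s) duplicate a training row")


# Disjoint IDs and distinct rows, so both checks pass silently
check_no_overlap([101, 102, 103], [201, 202])
check_no_duplicate_rows(np.array([[1.0, 2.0], [3.0, 4.0]]), np.array([[5.0, 6.0]]))
```

Exact-match checks only catch verbatim duplicates. Near-duplicates, such as the same record with a reformatted timestamp, need domain-specific keys.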

Regular audits of data management and configuration practices are a non-negotiable necessity. Periodic scrutiny ensures that safeguards against data leakage are not only in place but also updated to meet new challenges and technological advancements.

Transparency around data operations within teams can foster an environment where potential weaknesses are openly discussed and promptly addressed. Cultivating a culture where team members vigilantly look out for signs of data leakage can significantly bolster a project’s defenses.

Data leakage protection doesn’t end with the deployment of a model; it extends into continuous monitoring. Tracking model performance on newer data can reveal subtle leaks overlooked during earlier phases. An unexpected gap between validation performance and production performance should prompt a return to the drawing board to investigate whether data leakage is the cause.
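A simple monitoring rule compares the accuracy estimated before deployment with accuracy measured on recent labeled production batches. The function below is a hypothetical sketch using only the Python standard library, and its 0.05 tolerance is an arbitrary placeholder. A flagged gap can also come from data drift, so it signals that an investigation is needed, not that leakage has been confirmed.

```python
from statistics import mean


def flag_performance_gap(offline_score, live_scores, tolerance=0.05):
    """Return True when live accuracy falls well below the offline estimate.

    offline_score: accuracy reported by validation before deployment
    live_scores:   accuracy measured on recent labeled production batches
    tolerance:     hypothetical acceptable drop; choose it for your own application
    """
    return (offline_score - mean(live_scores)) > tolerance


print(flag_performance_gap(0.97, [0.95, 0.96, 0.94]))  # False: small gap
print(flag_performance_gap(0.97, [0.78, 0.81, 0.80]))  # True: large gap worth investigating
```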

Tools and libraries that help detect and prevent data leaks, such as the pipeline and cross-validation utilities in popular machine learning frameworks, equip practitioners to address vulnerabilities systematically without hindering innovation. Integrated into development pipelines, these tools serve as additional layers of defense against the pitfalls of data leakage.

Fortified by these methodologies, machine learning practitioners can reduce the risks posed by data leakage. Through diligent application of these principles, the integrity and reliability of machine learning solutions are significantly bolstered, ultimately safeguarding the immense potential of this transformative technology.

In conclusion, the fight against data leakage is pivotal in the quest for creating machine learning models that perform reliably and accurately in real-world scenarios. The key takeaway is the importance of vigilance and appropriate data handling practices throughout the lifecycle of a machine learning project. By prioritizing these practices, we can significantly reduce the risk of data leakage, ensuring our models deliver on their promise without being misled by illusory performance metrics.

Emad Morpheus is a tech enthusiast with a unique flair for AI and art. Backed by a Computer Science background, he dove into the captivating world of AI-driven image generation five years ago. Since then, he has been honing his skills and sharing his insights on AI art creation through his blog posts. Outside his tech-art sphere, Emad enjoys photography, hiking, and piano.