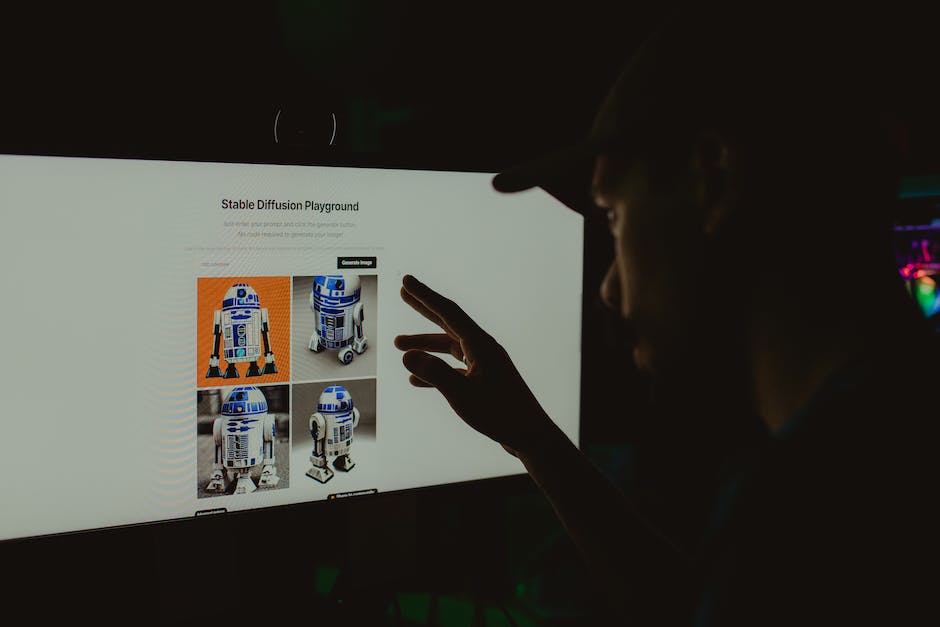

In deep learning, one soon encounters the idea of stable diffusion: keeping the flow of information and updates through a model well-behaved during training. Done well, it makes machine learning algorithms more efficient, accurate, and resilient, which is why it plays a central role in the field.

This article aims to give students a solid understanding of stable diffusion in deep learning: its mathematical and technical basis, its role and importance, its main variants, its real-world applications, the challenges it poses, and the trends likely to shape it.

Fundamentals of Stable Diffusion

Understanding Stable Diffusion in Deep Learning

In deep learning, stable diffusion is closely tied to the efficient training of complex neural networks. Its importance comes from keeping a model’s computations predictable and continuous as signals pass forward and gradients pass backward through many layers. Put simply, stable diffusion describes a network’s ability to keep variances from inflating layer after layer and to limit harmful co-adaptation between layers.

At its core, stable diffusion is achieved through a set of techniques that make training more stable, such as gradient normalization, weight normalization, and batch normalization. Their shared goal is a predictable, stable flow of gradient information through the layers of a deep network during backpropagation.

Mathematically, the core idea is to control the size of each update step during training, which in deep learning is usually driven by stochastic gradient descent (SGD). By preventing large, abrupt changes in the weights, the optimizer follows a smoother path down the complex, high-dimensional loss landscape that a model navigates during training.
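One concrete way to cap the size of an update step is gradient-norm clipping applied before an SGD step. Whether this is exactly the normalization the text has in mind is an assumption; the function name `sgd_step_with_clipping` and the `max_norm` threshold below are hypothetical, chosen only for illustration.

```python
import numpy as np


def sgd_step_with_clipping(w, grad, lr=0.1, max_norm=1.0):
    """Return the weights after one SGD step with gradient-norm clipping.

    If the gradient's L2 norm exceeds max_norm, the gradient is rescaled to
    have norm max_norm (same direction), which caps the size of the update.
    """
    norm = np.linalg.norm(grad)
    if norm > max_norm:
        grad = grad * (max_norm / norm)
    return w - lr * grad


w = np.array([1.0, -2.0])
huge_grad = np.array([300.0, 400.0])   # norm 500: an unclipped step would move w by 50
w_new = sgd_step_with_clipping(w, huge_grad, lr=0.1, max_norm=1.0)
print(w_new)   # the step length is now at most lr * max_norm = 0.1
```

Gradients whose norm is already below `max_norm` pass through unchanged, so clipping only intervenes on the rare, abnormally large steps.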

Stable Diffusion and Gradient Flow

Gradient flow is a core concept in stable diffusion. The goals are to keep gradient magnitudes roughly uniform across layers and to make sure gradients flow backward without exploding or vanishing.

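Exploding gradients can overflow the floating-point range, producing inf and then NaN values, while vanishing gradients leave the lower (earlier) layers stagnant: their parameters barely get updated. Because backpropagation multiplies the gradient by each layer’s Jacobian in turn, a per-layer factor even slightly above or below 1 compounds geometrically with depth. Stable diffusion techniques keep the gradient flow in a healthy range so that training stays effective.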
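The following NumPy sketch makes the compounding effect concrete. It pushes a gradient backward through a stack of hypothetical linear layers whose weights are a random orthogonal matrix times a scale factor, so every layer multiplies the gradient norm by exactly that factor. Depth, width, and scales are illustrative assumptions, and the toy ignores the nonlinearities of a real network.

```python
import numpy as np


def backprop_norms(scale, depth=20, width=8, seed=0):
    """Gradient norms while backpropagating through `depth` linear layers.

    Each layer's weight is scale * Q with Q orthogonal, so each layer
    multiplies the gradient norm by exactly `scale`.
    """
    rng = np.random.default_rng(seed)
    grad = rng.standard_normal(width)
    norms = [np.linalg.norm(grad)]
    for _ in range(depth):
        q, _ = np.linalg.qr(rng.standard_normal((width, width)))  # random orthogonal Q
        w = scale * q
        grad = w.T @ grad          # backward pass through y = W x
        norms.append(np.linalg.norm(grad))
    return norms


for s in (0.5, 1.0, 2.0):
    n = backprop_norms(s)
    print(f"scale={s}: last/first gradient norm ratio = {n[-1] / n[0]:.3g}")
```

With 20 layers, a scale of 0.5 shrinks the gradient norm by 0.5^20 (about 1e-6), a scale of 2.0 grows it by 2^20 (about 1e6), and only a scale of 1.0 preserves it.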

A crucial part of maintaining stable diffusion is normalizing the weights. Ideally, all layers of a network would learn at a similar pace so that learning progresses uniformly. Weight normalization helps by rewriting each weight vector as a direction multiplied by a learned scalar length; decoupling magnitude from direction in this way helps keep gradient magnitudes comparable across layers and promotes more synchronized learning.
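A minimal sketch of the weight-normalization reparameterization for a dense layer, assuming a NumPy weight matrix with one row per output unit. The function name is hypothetical; frameworks such as PyTorch provide their own built-in versions.

```python
import numpy as np


def weight_norm(V, g):
    """Weight normalization for a dense layer: W[i] = g[i] * V[i] / ||V[i]||.

    V has shape (out_features, in_features) and g has shape (out_features,).
    Each output unit's weight vector takes its direction from V and its
    length from the scalar g[i].
    """
    row_norms = np.linalg.norm(V, axis=1, keepdims=True)
    return g[:, None] * V / row_norms


rng = np.random.default_rng(0)
V = rng.standard_normal((4, 3))
g = np.array([1.0, 2.0, 0.5, 3.0])
W = weight_norm(V, g)
print(np.linalg.norm(W, axis=1))        # matches g: row lengths are set by g alone
W_rescaled = weight_norm(10.0 * V, g)   # rescaling V leaves W unchanged
```

Because the length lives in `g`, an optimizer can adjust a unit’s scale with a single parameter instead of through every entry of `V`.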

Stable Diffusion Techniques in Deep Learning

Several normalization techniques are used in deep learning to promote stable diffusion. Batch normalization is a popular one: for each feature, it computes the mean and variance over the current mini-batch, normalizes the layer’s inputs with those statistics, and then applies a learnable scale and shift. At inference time, running averages of the statistics collected during training are used instead of batch statistics.
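A minimal NumPy sketch of the batch-normalization forward pass in training mode, for a 2-D array of shape (batch, features). The function name is hypothetical, and the inference-time path that uses running averages is omitted.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Batch normalization forward pass in training mode.

    x has shape (batch, features). Statistics are computed per feature over
    the batch, then a learnable per-feature scale (gamma) and shift (beta)
    are applied to the normalized values.
    """
    mean = x.mean(axis=0)
    var = x.var(axis=0)                        # biased (divide-by-N) batch variance
    x_hat = (x - mean) / np.sqrt(var + eps)    # eps avoids division by zero
    return gamma * x_hat + beta


rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))   # activations far from zero mean, unit variance
y = batch_norm_train(x, gamma=np.ones(4), beta=np.zeros(4))
print(y.mean(axis=0).round(6), y.std(axis=0).round(3))
```

With `gamma = 1` and `beta = 0`, each feature comes out with mean about 0 and standard deviation about 1; learning `gamma` and `beta` lets the network undo the normalization where that helps.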

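Other normalization techniques, such as Layer Normalization, Instance Normalization, and Group Normalization, work on the same principle but compute their statistics over different scopes: layer normalization over all features of a single sample, instance normalization over the spatial positions of each channel of each sample, and group normalization over groups of channels within a sample. Because layer and group normalization do not depend on the batch dimension, they behave the same even with very small batches. Depending on the use case, these techniques can be chosen selectively to improve training stability and network performance.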
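The difference in scope comes down to which axes the mean and variance are computed over. The sketch below assumes an image-style tensor laid out as (N, C, H, W) and omits the learnable scale and shift; the helper names `normalize` and `group_norm` are hypothetical.

```python
import numpy as np


def normalize(x, axes, eps=1e-5):
    """Subtract the mean and divide by the standard deviation over `axes`."""
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def group_norm(x, num_groups, eps=1e-5):
    """Group normalization for x of shape (N, C, H, W); C must divide evenly."""
    n, c, h, w = x.shape
    grouped = x.reshape(n, num_groups, c // num_groups, h, w)
    return normalize(grouped, axes=(2, 3, 4), eps=eps).reshape(n, c, h, w)


rng = np.random.default_rng(0)
x = rng.normal(loc=2.0, scale=3.0, size=(4, 6, 5, 5))   # (N, C, H, W)

bn = normalize(x, axes=(0, 2, 3))   # batch norm: one mean/var per channel
ln = normalize(x, axes=(1, 2, 3))   # layer norm: one mean/var per sample
inn = normalize(x, axes=(2, 3))     # instance norm: one per sample and channel
gn = group_norm(x, num_groups=3)    # group norm: one per sample and group of 2 channels
```

Group normalization interpolates between the other two: with a single group it reduces to layer normalization, and with one channel per group it reduces to instance normalization.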

Taken together, these techniques make stable diffusion the fulcrum that supports efficient and stable deep learning models. It regulates gradient flow, prevents drastic changes in the weights, and keeps learning uniform across layers. These techniques are a large part of what makes deep networks robust and trainable, and they continue to drive progress in the field.

Role and Importance of Stable Diffusion in Deep Learning

Diving Deeper into Stable Diffusion in Deep Learning

Deep learning, a specialized branch of machine learning, processes data through many stacked layers to find the patterns that drive its predictions and decisions. Stable diffusion is a cornerstone of that process: it improves training efficiency and makes complex models built on machine learning algorithms more trustworthy.

Its main advantage is reducing errors and improving predictive accuracy. By damping volatile, erratic updates during training, which can otherwise produce anomalies that undermine the reliability of the results, it leads to fewer model errors, more accurate predictions, and better overall performance.

Detangling Complex Network Structure and High-Dimensional Data

Deep learning algorithms often have to handle data represented as complex, high-dimensional networks, which creates several challenges. Stable diffusion helps by breaking these complex structures down so that algorithms can learn from the data more easily and efficiently.

High-dimensional data suffers from the ‘curse of dimensionality’: the data become sparser and harder to handle and process with each added dimension. Stable diffusion helps here by mapping high-dimensional data onto a lower-dimensional representation without significant loss of information.
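The text does not say how this mapping is carried out. One established diffusion-based method for it is diffusion maps: build Gaussian affinities between points, normalize them into a random-walk (Markov) transition matrix, and use its leading non-trivial eigenvectors as new coordinates. The sketch below assumes that method, a hand-picked bandwidth `epsilon`, and a small dataset (it builds dense n-by-n matrices); `diffusion_map` is a hypothetical name.

```python
import numpy as np


def diffusion_map(X, n_components=2, epsilon=1.0, t=1):
    """Embed the rows of X in n_components dimensions with a basic diffusion map.

    Builds Gaussian affinities K, forms the random-walk matrix P = D^-1 K
    (D = diagonal of row sums), and returns the top non-trivial right
    eigenvectors of P scaled by their eigenvalues to the power t.
    """
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    K = np.exp(-sq_dists / epsilon)
    d = K.sum(axis=1)
    # A = D^-1/2 K D^-1/2 is symmetric and has the same eigenvalues as P,
    # so the symmetric eigensolver eigh can be used.
    A = K / np.sqrt(np.outer(d, d))
    eigvals, eigvecs = np.linalg.eigh(A)
    order = np.argsort(eigvals)[::-1]          # largest eigenvalue first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    psi = eigvecs / np.sqrt(d)[:, None]        # right eigenvectors of P
    # Column 0 is the trivial constant eigenvector (eigenvalue 1); skip it.
    return psi[:, 1:n_components + 1] * eigvals[1:n_components + 1] ** t


rng = np.random.default_rng(0)
s = np.sort(rng.uniform(0.0, 1.0, 150))             # hidden 1-D coordinate
direction = np.array([1.0, 2.0, -1.0, 0.5, 3.0])
X = s[:, None] * direction + 0.01 * rng.standard_normal((150, 5))  # 5-D data
emb = diffusion_map(X, n_components=2, epsilon=0.5)
print(emb.shape)
```

The data here lie close to a line in five dimensions, so most of their structure is captured by the first diffusion coordinate. The bandwidth matters: too small and the affinity graph fragments, too large and local structure is washed out.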

Optimization of Weight Learning

During training, a deep learning model repeatedly adjusts its weights until it closely approximates the real-world relationship in the data. Stable diffusion keeps this process from becoming unstable, where the weights fluctuate wildly instead of converging to a stable solution.

Stable diffusion smooths out irregularities in the learning phase so that the weights are optimized gradually and stably. This improves the model’s predictive ability and makes its outputs more reliable.

Facilitator of Generalization in Deep Learning

Stable diffusion also supports generalization, a fundamental capability of machine learning algorithms. Generalization is the ability to perform accurately on new, unseen data after learning from a training dataset.

Stable diffusion helps manage overfitting, where a model performs well on the training data but poorly on unseen data. More stable learning helps a model carry the patterns it has learned over to new data, strengthening its ability to generalize and increasing its real-world applicability.

Significance of Stable Diffusion in Deep Learning

Stable diffusion contributes substantially to the accuracy, efficiency, and overall performance of deep learning algorithms. Its relevance ranges from weight optimization to handling complex network structures and high-dimensional datasets. By improving generalization and stabilizing the learning phase, it proves an invaluable component of deep learning.

Types and Applications of Stable Diffusion

Elucidating Stable Diffusion in the Realm of Deep Learning

Stable diffusion refers to a set of numerical techniques used in machine learning to train deep models. These methods simulate random movements that account for the high-dimensional nature of the optimization problem. Their main strength is robustness to destabilizing factors and noise during learning, which creates a more consistent learning environment and helps deep learning models generalize better and reach higher accuracy.

Types of Stable Diffusion

Several types of stable diffusion strategies are used when training deep models. The two principal ones are deterministic and stochastic diffusion. Deterministic diffusion, as the name suggests, follows a predictable, prescribed path to minimize error during learning. It is most common with regular structures and data in which random variation is minimal.

Stochastic diffusion, by contrast, introduces random variation into the learning process. It is better suited to unpredictable or irregular structures in the data and to problems with high uncertainty or randomness. Its flexibility often yields more robust models, even when the data are noisy.
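The text does not give a formula for the two variants. As an assumed illustration, the sketch below contrasts plain gradient descent (deterministic) with Langevin dynamics (stochastic), which adds Gaussian noise with variance 2 * lr * temperature to every gradient step. The quadratic loss, step size, temperature, and function names are illustrative choices, not taken from the article.

```python
import numpy as np


def gradient(x, a=1.0):
    """Gradient of the toy loss f(x) = a * x**2 / 2."""
    return a * x


def deterministic_step(x, lr):
    """Plain gradient descent: the same input always gives the same output."""
    return x - lr * gradient(x)


def langevin_step(x, lr, temperature, rng):
    """Gradient step plus Gaussian noise with variance 2 * lr * temperature."""
    noise = rng.standard_normal(np.shape(x))
    return x - lr * gradient(x) + np.sqrt(2.0 * lr * temperature) * noise


rng = np.random.default_rng(0)
x_det = np.full(2000, 3.0)   # 2,000 identical starting points
x_sto = np.full(2000, 3.0)   # 2,000 independent stochastic chains
for _ in range(1000):
    x_det = deterministic_step(x_det, lr=0.01)
    x_sto = langevin_step(x_sto, lr=0.01, temperature=1.0, rng=rng)
print(x_det[0], x_sto.mean(), x_sto.var())
```

The deterministic iterates collapse onto the minimum at 0, while the stochastic chains keep exploring around it with a variance close to temperature / a (about 1 here). The temperature controls how much exploration the noise adds.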

Other variants include homogeneous and inhomogeneous diffusion processes. Homogeneous diffusion keeps the diffusivity constant everywhere and throughout learning, which suits data structures of uniform complexity. Inhomogeneous diffusion adapts the diffusivity to local complexity in the data, which gives more accurate models when the data contain substantial irregularities or variation.
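The homogeneous/inhomogeneous distinction is standard for diffusion equations, for example in image processing. Assuming that reading, the sketch below smooths a noisy 1-D step signal either with constant diffusivity (the heat equation) or with the Perona–Malik diffusivity 1 / (1 + (du/kappa)^2), which becomes small across large jumps. It acts on a signal rather than on network weights, and the function name and parameters are hypothetical.

```python
import numpy as np


def diffuse(u, steps, dt=0.2, kappa=None):
    """Explicit 1-D diffusion of signal u with zero-flux (Neumann) boundaries.

    kappa=None  -> homogeneous diffusion: diffusivity 1 everywhere.
    kappa=float -> Perona-Malik inhomogeneous diffusion: diffusivity
                   1 / (1 + (du / kappa)**2), which is small across big jumps.
    Diffusivity never exceeds 1, so dt <= 0.5 keeps this scheme stable.
    """
    u = np.array(u, dtype=float)             # work on a float copy
    for _ in range(steps):
        du = np.diff(u)                      # differences between neighbours
        if kappa is None:
            c = np.ones_like(du)
        else:
            c = 1.0 / (1.0 + (du / kappa) ** 2)
        flux = np.concatenate(([0.0], c * du, [0.0]))  # no flux through the ends
        u += dt * np.diff(flux)              # net inflow into each sample
    return u


rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 100)
u0 = (x >= 0.5).astype(float) + 0.02 * rng.standard_normal(100)  # noisy step
homo = diffuse(u0, steps=50)
pm = diffuse(u0, steps=50, kappa=0.1)
print("jump at the edge:", homo[50] - homo[49], pm[50] - pm[49])
```

Homogeneous diffusion blurs the step along with the noise, while the inhomogeneous version smooths the small fluctuations but keeps most of the jump. Both conserve the total sum of the signal.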

Applications of Stable Diffusion in Deep Learning

Because it yields more accurate and robust models, stable diffusion has many applications in deep learning. In image processing tasks such as segmentation, object detection, and recognition, it is used to build robust models that handle varying image scales and conditions.

In natural language processing, stable diffusion aids in understanding the semantic structures of text for better sentiment analysis, machine translation, or text generation. It’s also widely employed in speech recognition technologies, where it helps enhance the accuracy of transcriptions.

Stable Diffusion in Different Industries

Beyond the confines of academia, stable diffusion has proven influential in various industries. In healthcare, stable diffusion techniques are applied in areas like cancer detection, predicting disease patterns, and analyzing medical imaging data.

In finance, it plays a vital role in risk management, where it is used to optimize portfolios and to predict market behavior from past patterns. In the automotive and aviation industries, stable diffusion methods are integral to advanced safety systems, such as Autonomous Emergency Braking (AEB) and other autopilot features.

In short, understanding stable diffusion and its different types is essential for building accurate and resilient deep learning models, and a thorough grasp of these methods is central to any serious study of the topic.

Challenges and Future of Stable Diffusion

Delving Into Stable Diffusion in Deep Learning

In deep learning, stable diffusion is the process of updating a model’s weights incrementally, avoiding abrupt parameter changes that often cause instability. This improves the model’s ability to learn by reducing the chance that training drifts away from the desired outcome. At its foundation lies stochastic gradient descent (SGD), a widely used optimization method in machine learning, and stability is maintained by carefully managing the learning rate so that each change is small and controlled.

Challenges in Implementing Stable Diffusion in Deep Learning

Despite its advantages, integrating stable diffusion into deep learning is not without challenges. First, there is a trade-off between stability and learning speed. Preventing drastic parameter changes inherently slows learning, because only small steps are taken toward the minimum of the loss function.

Second, choosing an appropriate learning rate can be demanding. A learning rate that is too low slows convergence, while one that is too high makes training unstable.
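The learning-rate trade-off can be seen exactly on a one-dimensional quadratic loss f(x) = a x^2 / 2, where each gradient-descent step multiplies x by (1 - lr * a). The sketch assumes this toy loss; the function name and values are illustrative.

```python
def gradient_descent_quadratic(a, lr, x0=1.0, steps=50):
    """Run fixed-step gradient descent on f(x) = a * x**2 / 2 and return x.

    The gradient is a * x, so each step computes x <- (1 - lr * a) * x.
    The iterates shrink only when |1 - lr * a| < 1, i.e. 0 < lr < 2 / a.
    """
    x = x0
    for _ in range(steps):
        x = x - lr * a * x
    return x


a = 10.0  # curvature of the toy loss, so the stability limit is lr < 0.2
for lr in (0.001, 0.05, 0.19, 0.25):
    print(f"lr={lr}: x after 50 steps = {gradient_descent_quadratic(a, lr):.3g}")
```

With a = 10, lr = 0.001 barely moves x in 50 steps, lr = 0.05 converges almost immediately, lr = 0.19 converges while flipping sign every step, and lr = 0.25 exceeds the threshold 2 / a = 0.2 and diverges.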

Finally, efficiency becomes a substantial challenge when stable diffusion is scaled to large datasets and complex models. Larger models need more computational power and memory, which calls for strategies such as parallel computing or batch processing, and these approaches can make it harder to keep the diffusion process stable.

Strategies to Confront the Challenges

Several strategies can address these challenges. Adaptive learning-rate methods such as AdaGrad, RMSProp, and Adam adjust the effective step size for each parameter based on the history of its gradients, which helps keep updates well scaled without extensive manual tuning of the learning rate.
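A minimal NumPy sketch of the Adam update rule, assuming its commonly used default hyperparameters (beta1 = 0.9, beta2 = 0.999, eps = 1e-8). The class is a hypothetical illustration, not any framework’s API; the usage compares it with plain gradient descent on a quadratic whose two directions have very different curvatures.

```python
import numpy as np


class Adam:
    """Minimal Adam optimizer for a single NumPy parameter array."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # running mean of gradients (first moment)
        self.v = None  # running mean of squared gradients (second moment)
        self.t = 0     # number of steps taken, used for bias correction

    def step(self, params, grad):
        if self.m is None:
            self.m = np.zeros_like(params)
            self.v = np.zeros_like(params)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2
        m_hat = self.m / (1 - self.beta1 ** self.t)   # correct the bias toward 0
        v_hat = self.v / (1 - self.beta2 ** self.t)
        # Dividing by sqrt(v_hat) makes each coordinate's step roughly lr,
        # regardless of how large or small its raw gradients are.
        return params - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


def grad_fn(w):
    # Gradient of f(w) = 0.5 * (100 * w[0]**2 + 0.01 * w[1]**2).
    return np.array([100.0, 0.01]) * w


w_adam = np.array([1.0, 1.0])
w_gd = np.array([1.0, 1.0])
opt = Adam(lr=0.01)
for _ in range(3000):
    w_adam = opt.step(w_adam, grad_fn(w_adam))
    w_gd = w_gd - 0.01 * grad_fn(w_gd)   # plain GD: lr must stay below 2/100
print(w_adam, w_gd)
```

Plain gradient descent needs lr < 2/100 for the steep direction, which leaves it crawling along the flat one; Adam rescales each coordinate by its own gradient history, so both coordinates approach the minimum.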

For efficiency, mini-batch gradient descent can help. It computes each gradient from a small random subset of the training data, which reduces computation and memory per step. The trade-off is noisier gradient estimates, so the batch size must balance cost against accuracy.
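A minimal sketch of mini-batch SGD for linear regression with a mean-squared-error loss, assuming NumPy arrays and synthetic data. The function name, batch size, and learning rate are illustrative.

```python
import numpy as np


def minibatch_sgd_linear_regression(X, y, lr=0.1, batch_size=32, epochs=20, seed=0):
    """Fit y ~ X @ w by mini-batch SGD on the mean squared error."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(epochs):
        order = rng.permutation(n)                  # reshuffle every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            Xb, yb = X[idx], y[idx]
            # Gradient of mean((Xb @ w - yb)**2), estimated from this batch only.
            grad = 2.0 * Xb.T @ (Xb @ w - yb) / len(idx)
            w -= lr * grad
    return w


rng = np.random.default_rng(1)
X = rng.standard_normal((1000, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = X @ true_w + 0.1 * rng.standard_normal(1000)
w_hat = minibatch_sgd_linear_regression(X, y)
print(w_hat)
```

Each update touches only `batch_size` rows, so a step costs a small fraction of a full pass over the data; smaller batches are cheaper per step but give noisier gradient estimates.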

Regularization can also improve model stability. L1 and L2 regularization add a penalty on the size of the weights (the sum of their absolute values or of their squares, respectively) to the loss function, which discourages overfitting and keeps the weights from growing without bound.
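A minimal sketch of L2 regularization for linear regression, assuming a mean-squared-error loss and plain gradient descent. The penalty `lam * ||w||^2` adds `2 * lam * w` to the gradient, which is why L2 regularization is closely related to weight decay. Function names and data are hypothetical.

```python
import numpy as np


def l2_loss_and_grad(w, X, y, lam):
    """Mean squared error plus the L2 penalty lam * ||w||^2, and its gradient."""
    residual = X @ w - y
    loss = np.mean(residual ** 2) + lam * np.sum(w ** 2)
    grad = 2.0 * X.T @ residual / len(y) + 2.0 * lam * w   # penalty adds 2 * lam * w
    return loss, grad


def fit(X, y, lam, lr=0.05, steps=5000):
    """Plain gradient descent from w = 0 on the regularized loss."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        _, grad = l2_loss_and_grad(w, X, y, lam)
        w -= lr * grad
    return w


rng = np.random.default_rng(0)
X = rng.standard_normal((50, 10))
true_w = np.zeros(10)
true_w[:3] = [3.0, -2.0, 1.0]               # only 3 of the 10 features matter
y = X @ true_w + 0.5 * rng.standard_normal(50)
w_plain = fit(X, y, lam=0.0)
w_l2 = fit(X, y, lam=1.0)
print(np.linalg.norm(w_plain), np.linalg.norm(w_l2))   # the penalty shrinks the weights
```

An L1 penalty would instead add `lam * sum(abs(w))`, whose (sub)gradient `lam * sign(w)` pushes small weights toward exactly zero.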

The Future of Stable Diffusion in Deep Learning

Looking ahead, the future of stable diffusion in deep learning depends on algorithms that better balance stability against learning speed, accuracy, and computational efficiency. As deep learning models grow more complex, more sophisticated regularization techniques will also be needed to keep them stable.

Parallel computing technologies will also play a pivotal role in mitigating efficiency issues. As computation technologies advance, more efficient ways of scaling up stable diffusion will become possible.

In conclusion, future research on stable diffusion in deep learning needs to focus on developing strategies that consider model complexity, learning speed, stability, and computational efficiency. These considerations are critical for optimizing deep learning models and expanding the applicability of these models in solving complex, real-world problems.

Ultimately, understanding stable diffusion is a key step toward mastering this ever-evolving field. Appreciating what diffusive dynamics can do in complex machine learning systems also means recognizing the challenges they bring.

The strategies for overcoming these obstacles, together with the outlook for future work, point toward deep learning systems that are more efficient, more resilient, and more transformative.

Understanding stable diffusion is therefore not just a compass for navigating deep learning; it is also a force that pushes the field into uncharted territory.

Emad Morpheus is a tech enthusiast with a unique flair for AI and art. Backed by a Computer Science background, he dove into the captivating world of AI-driven image generation five years ago. Since then, he has been honing his skills and sharing his insights on AI art creation through his blog posts. Outside his tech-art sphere, Emad enjoys photography, hiking, and piano.