Variational Autoencoders (VAEs) are one of the more intriguing ideas in machine learning and artificial intelligence. They hold a distinct place in deep learning because they generate new data by sampling latent variables from learned probability distributions. This article walks through the theory behind VAEs, their architecture (the encoder, the decoder, and the loss functions that drive training), and their applications, from health care to manufacturing and finance, along with the challenges and limitations that spur ongoing research and development.

Foundations of Variational Autoencoders

Understanding the Theory Behind VAEs: Variational Autoencoders

Central to many advancements in machine learning, Variational Autoencoders (VAEs) stand out for their unique blend of neural network and statistical modeling. These powerful tools allow for the generation of new data that’s similar to the data they were trained on, making them crucial for tasks like image generation, data compression, and more. But what exactly are VAEs built upon? Let’s peel back the layers and delve into the theoretical foundations that make VAEs both fascinating and incredibly useful.

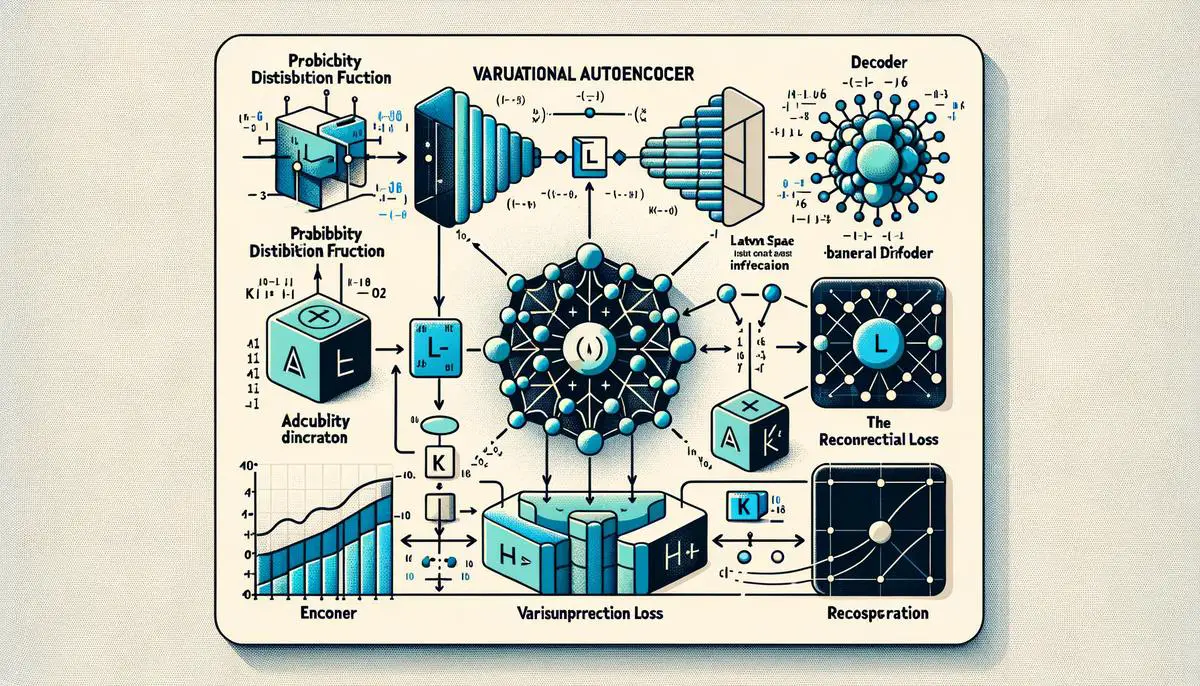

At its core, a VAE is grounded in probability theory and statistics, with a particular emphasis on Bayesian inference. This approach combines prior knowledge with new evidence to make more accurate predictions. In the context of VAEs, this means merging our understanding of how data might be structured (the prior) with actual observed data (the evidence) to generate new, unseen data points that share characteristics with the observed data.

Exact Bayesian inference, however, is often computationally intractable, because it requires integrating over every possible value of the latent variables. VAEs tackle this challenge with an approximation technique known as variational inference. This method approximates the complex posterior distribution over the latent variables with a simpler one, typically a Gaussian, and turns inference into an optimization problem: adjust the parameters of the simple distribution until it matches the true one as closely as possible. This is where VAEs get the “variational” part of their name: the term comes from variational inference, which has its roots in the calculus of variations.
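In symbols, the quantity that variational inference optimizes can be written as follows. The notation is introduced here for illustration and is not taken from the text above: x is an observed data point, z is the latent variable, p(z) is the prior, q_φ(z | x) is the simpler approximating distribution, and p_θ(x | z) is the model that generates data from a latent point.

$$
\log p_\theta(x) \;\ge\; \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] \;-\; D_{\mathrm{KL}}\big(q_\phi(z \mid x)\,\|\,p(z)\big)
$$

The right-hand side is usually called the evidence lower bound (ELBO). Maximizing it rewards explaining the data well (the first term) while keeping the approximating distribution close to the prior (the second term).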

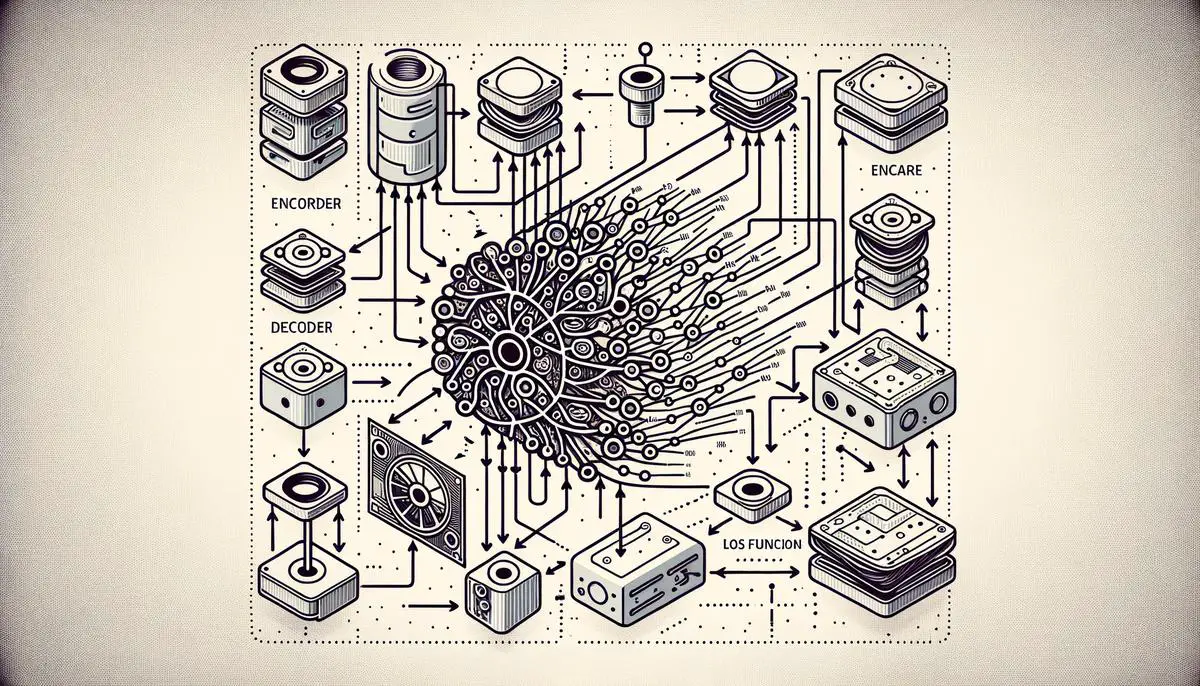

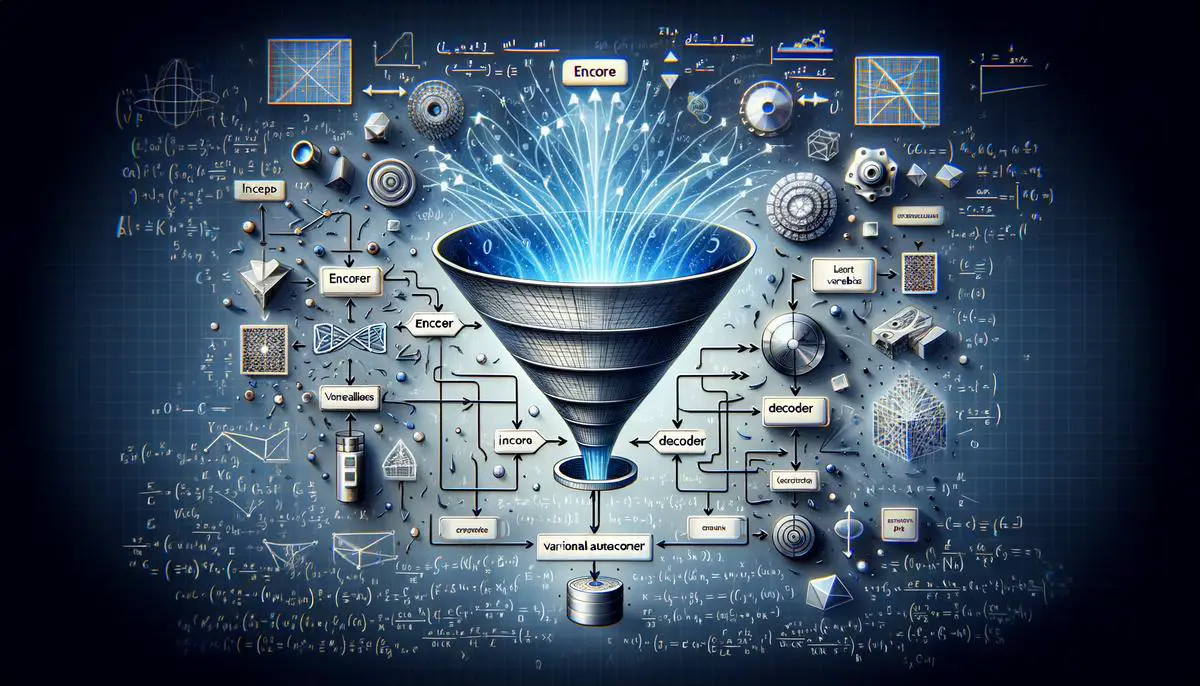

Another cornerstone of VAE theory is the concept of autoencoders. An autoencoder is a type of neural network designed to learn efficient representations of data, typically for dimensionality reduction. It has two main parts: an encoder, which compresses the input data into a smaller, dense representation, and a decoder, which reconstructs the data from this compressed form. The VAE extends this model by introducing a probabilistic spin to the encoding and decoding process, making the model not just learn a fixed representation, but a distribution over all possible representations. This allows VAEs to generate new data points by sampling from these distributions.

Deep learning, the broader field in which neural networks reside, further supports the operation of VAEs. The power of deep learning models, characterized by their layered structures, enables VAEs to handle and generate highly complex data such as images and text. By leveraging deep neural networks, VAEs can effectively capture the intricate patterns and variations within the data, allowing for more realistic and varied data generation.

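Finally, optimization algorithms play a vital role in training VAEs, with the most common being backpropagation in conjunction with gradient descent. Because gradients cannot pass directly through a random sampling step, VAEs rely on the reparameterization trick, which expresses each sample as a deterministic function of the distribution’s parameters plus independent noise. This combination allows for the efficient adjustment of the neural network’s parameters, ensuring the model can accurately learn to generate new data that mimics the original dataset.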

In essence, the development and application of VAEs rest on a foundation built from the interplay of probability theory, statistical methods, neural networks, and optimization techniques. These theoretical underpinnings enable VAEs to not just model data, but to create new instances that expand our ability to understand and utilize the information hidden within our vast datasets. Whether it’s crafting new images that look like they could belong to a given collection of photographs or compressing data in ways previously unimagined, VAEs represent a significant step forward in our journey through the realms of artificial intelligence and machine learning.

Architecture of VAEs

Variational Autoencoders, commonly known as VAEs, are a fascinating blend of artificial intelligence and statistics, particularly shining in the field of unsupervised learning. They’re innovatively designed to not just learn the complexities of data, but also to generate new data that’s similar to what they’ve been trained on. Let’s peel back the layers of a VAE’s architectural design, focusing on elements that complement and build upon the foundational concepts already discussed.

The Architectural Blueprint

At the core of a VAE’s architecture lie two primary components: the encoder and the decoder. These elements work in tandem, connected by a clever mechanism that handles the probabilistic aspects of the model, ensuring the VAE stands apart from other types of autoencoders.

- Encoder: The journey begins with the encoder. Imagine this as a sophisticated funnel where input data (like images or text) goes in, and instead of a single compressed representation, we get two things: means (μ) and variances (σ²). These are the parameters of a Gaussian distribution that describes where the input is likely to sit in a latent space. This space is a lower-dimensional representation of the data, and the encoder assigns each input a region of it rather than a single point.

- Sampling: Between the encoder and decoder, a unique step takes place. Using the means and variances, the model samples a point in the latent space. In practice this is done with the reparameterization trick: the sample is computed as z = μ + σ · ε, where ε is drawn from a standard normal distribution, so gradients can still flow back to the encoder during training. This randomness is what allows the VAE to generate diverse and novel outputs. (The “variational” in the name refers to the variational inference used to train the model rather than to the sampling step itself.)

- Decoder: Once a point is sampled, it travels to the decoder. Think of the decoder as the encoder’s reverse: an expanding funnel. Here, the sampled latent point is gradually upscaled back to the original data’s dimension. During training, the decoder’s task is to reconstruct the input from this sample. Once trained, it can be fed points drawn directly from the prior to construct something new yet similar to the training data.

- Reconstruction Loss & KL Divergence: To train a VAE effectively, it’s crucial to measure how well it performs, which is where reconstruction loss and KL divergence come into play. Reconstruction loss ensures that the output closely matches the original input, maintaining fidelity to the data the model learns from. KL divergence, on the other hand, measures how far the encoder’s distribution for each input is from the prior (typically a standard normal distribution) and penalizes the gap. This regularization keeps the latent space smooth and well organized, so that points sampled from the prior decode into plausible outputs rather than noise.
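The four steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a trainable model: the layer sizes, the function names, and the randomly initialized single-layer weights are all assumptions made for the example, and squared error is just one common choice of reconstruction loss.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 8-dimensional inputs, 2-dimensional latent space.
INPUT_DIM, LATENT_DIM = 8, 2

# Randomly initialized linear layers stand in for trained deep networks.
W_mu = rng.normal(scale=0.1, size=(INPUT_DIM, LATENT_DIM))
W_logvar = rng.normal(scale=0.1, size=(INPUT_DIM, LATENT_DIM))
W_dec = rng.normal(scale=0.1, size=(LATENT_DIM, INPUT_DIM))


def encode(x):
    """Map inputs to the mean and log-variance of a diagonal Gaussian."""
    return x @ W_mu, x @ W_logvar


def sample_latent(mu, logvar, rng):
    """Reparameterized sample: z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps


def decode(z):
    """Map latent points back to input space; the sigmoid keeps values in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(z @ W_dec)))


def vae_loss(x, x_hat, mu, logvar):
    """Batch means of (total, reconstruction, KL), with KL taken against N(0, I)."""
    recon = np.sum((x - x_hat) ** 2, axis=1)
    kl = -0.5 * np.sum(1.0 + logvar - mu**2 - np.exp(logvar), axis=1)
    return float(np.mean(recon + kl)), float(np.mean(recon)), float(np.mean(kl))


x = rng.uniform(size=(4, INPUT_DIM))  # a batch of 4 toy inputs
mu, logvar = encode(x)
z = sample_latent(mu, logvar, rng)
x_hat = decode(z)
total, recon, kl = vae_loss(x, x_hat, mu, logvar)
print(f"total={total:.3f} recon={recon:.3f} kl={kl:.3f}")
```

The encoder here returns the log-variance rather than the variance, a common convention that keeps the variance positive without extra constraints. In a real model the three weight matrices would be replaced by deep networks and updated by gradient descent on the total loss.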

Bringing It All Together

What makes VAEs truly captivating is how they balance the act of learning and creativity. By structurally encoding data into a latent space characterized by statistical parameters, then decoding from samples within this space, VAEs can both understand the data they’re fed and imagine new instances within the same realm. This ability finds profound applications across image generation, enhancing creative industries, and even in the development of more nuanced AI systems.
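Generating new instances after training needs only the decoder. The sketch below assumes a standard normal prior over the latent space and uses placeholder decoder weights in place of trained ones; `generate` and the dimensions are hypothetical names and values chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical sizes; a trained model would define these.
LATENT_DIM, OUTPUT_DIM = 2, 8

# Placeholder decoder weights; in practice these come from training.
W_dec = rng.normal(scale=0.5, size=(LATENT_DIM, OUTPUT_DIM))


def decode(z):
    """Map latent points to data space; the sigmoid keeps values in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(z @ W_dec)))


def generate(n, rng):
    """Draw n latent points from the N(0, I) prior and decode them."""
    z = rng.standard_normal((n, LATENT_DIM))
    return decode(z)


samples = generate(5, rng)
print(samples.shape)
```

No input data is involved at this stage: every call draws fresh latent points, so each batch of outputs differs while following whatever patterns the decoder has learned.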

The Conclusion of the Beginning

In synthesizing the above elements — from encoding through to the imaginative leap in decoding — the architectural design of VAEs presents a harmonious blend of statistics, probability, and neural network theories. It’s an architecture not just built to analyze the past but to envisage potential futures, making it a cornerstone technology in the unfolding narrative of artificial intelligence.

Applications of VAEs

Understanding the Impact of Variational Autoencoders Beyond the Basics

Variational Autoencoders (VAEs) are taking the technology and creative sectors by storm, with applications that extend far beyond simple image generation. While the foundational elements such as probability theory, Bayesian inference, and deep learning offer a glimpse into the engine room of VAEs, the real intrigue lies in how these mechanisms translate into practical, industry-shifting applications. Let’s dive deeper into the multifaceted world of VAEs and explore their transformative impact across various industries.

Enhancing User Experience in Entertainment and Media

In the bustling world of entertainment and media, VAEs have found a sweet spot. Video streaming services, for example, use VAEs to power sophisticated recommendation engines. By analyzing viewing habits and comparing them with vast datasets, VAEs can predict which movies or TV shows a user is likely to enjoy. This is not just about matching genres but understanding the nuanced preferences of individual viewers, making recommendations more personalized and engaging.

Moreover, VAEs are revolutionizing the gaming industry by contributing to dynamic game development. They assist in creating more lifelike and responsive environments by generating diverse in-game assets and characters. This not only enriches the gaming experience but significantly reduces the time and resources needed for game development.

Driving Innovation in Healthcare

The healthcare sector is leveraging the power of VAEs to transform patient care and research. In medical imaging, VAEs have been explored for improving images such as MRIs and CT scans, for example by reducing noise. Better image quality can support more accurate diagnoses and treatment plans. Furthermore, VAEs are applied in genomics, where they help in understanding the complex patterns within DNA sequences. By analyzing genetic data, researchers can uncover new insights into diseases, leading to innovative treatments and personalized medicine.

Empowering Automation in Manufacturing

Manufacturing industries are harnessing VAEs to revolutionize their operations. Quality control, a vital aspect of manufacturing, has seen significant advancements with the integration of VAEs. These models can detect anomalies in products by analyzing images taken during the manufacturing process. This automation ensures high standards are maintained efficiently, reducing the need for manual inspections.
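One common way to turn a model like this into an anomaly detector is to flag items whose reconstruction error is unusually high. The sketch below is a toy version of that idea under loud assumptions: `reconstruct` is a simple linear projection standing in for a trained VAE’s encode-then-decode pass, the data are synthetic 3-dimensional points rather than product images, and the 99th-percentile threshold is an arbitrary illustrative choice.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a trained VAE: project onto the direction that "normal" data follows.
# A real system would call the model's encoder and decoder here instead.
direction = np.array([1.0, 1.0, 1.0]) / np.sqrt(3.0)


def reconstruct(x):
    """Hypothetical reconstruction: keep only the component along `direction`."""
    return (x @ direction)[:, None] * direction


def reconstruction_error(x):
    """Squared distance between each item and its reconstruction."""
    return np.sum((x - reconstruct(x)) ** 2, axis=1)


# Synthetic "normal" items: points along the direction plus small noise.
normal = rng.normal(size=(200, 1)) * direction + rng.normal(scale=0.05, size=(200, 3))

# Threshold chosen from normal data only: the 99th percentile of its errors.
threshold = np.percentile(reconstruction_error(normal), 99)

candidates = np.array([
    [1.0, 1.0, 1.0],   # lies on the learned pattern
    [2.0, -2.0, 0.0],  # far from it
])
flags = reconstruction_error(candidates) > threshold
print(flags)
```

The first candidate follows the pattern of the normal data and is reconstructed almost exactly, while the second is not and gets flagged. With a real VAE the same logic applies, with image reconstruction error in place of this toy distance.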

Additionally, VAEs play a crucial role in predictive maintenance. By monitoring equipment data, they can predict potential failures before they occur, minimizing downtime and saving costs. This ability to foresee issues enables companies to act proactively, ensuring a smooth manufacturing process.

Elevating Financial Services

The financial sector benefits from VAEs through enhanced security and fraud detection. VAEs analyze transaction patterns to detect anomalies that could indicate fraudulent activity. This proactive approach to security protects both institutions and customers from potential financial loss.

Moreover, VAEs contribute to more sophisticated risk management strategies. By analyzing market data, they can predict trends and identify potential risks, allowing financial institutions to make more informed decisions. This deep understanding of market dynamics supports better investment strategies and financial planning.

Conclusion

The versatility of Variational Autoencoders is evident in their wide-ranging applications across sectors. From personalizing user experiences in entertainment to pushing the boundaries of medical research, VAEs are at the forefront of technological innovation. Their ability to understand and generate complex data patterns not only enhances current processes but also paves the way for future advancements. As industries continue to explore the potential of VAEs, their impact is set to expand, promising exciting developments in the world of artificial intelligence and beyond.

Challenges and Limitations

Despite the promising applications and sophisticated mechanics of Variational Autoencoders (VAEs), integrating them into real-world systems presents several challenges. One of the primary obstacles is the computational demand. VAEs, with their two-fold structure of encoder and decoder networks, require significant computational resources for training. This aspect often necessitates advanced hardware, which may not be accessible to all developers, particularly those in startups or working on limited budgets.

Moreover, VAEs involve a delicate balance between the reconstruction loss and the Kullback-Leibler (KL) divergence. Striking this balance is crucial: if the reconstruction term dominates, the model can overfit to the training data and leave the latent space poorly organized, and if the KL term dominates, the model can lose its capacity to encode useful information and generate convincing outputs. However, tuning the relative weight of the two terms is complex and can be time-consuming. It often requires iterative experimentation, which can further increase the computational costs.
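One widely used way to manage this balance is to multiply the KL term by a weight, often called β, and to ramp that weight up gradually during training (sometimes called KL annealing or warm-up). The source does not prescribe a method, so the sketch below is only an illustration: the function names, the linear schedule, and the example loss values are assumptions.

```python
def kl_weight(step, warmup_steps, beta_max=1.0):
    """Linearly ramp the KL weight from 0 to beta_max over warmup_steps."""
    if warmup_steps <= 0:
        return beta_max
    return beta_max * min(1.0, step / warmup_steps)


def weighted_loss(recon_loss, kl_loss, beta):
    """Total loss with the KL term scaled by beta."""
    return recon_loss + beta * kl_loss


# Illustrative loss values only; real values come from the model during training.
for step in (0, 500, 1000, 2000):
    beta = kl_weight(step, warmup_steps=1000)
    print(step, beta, weighted_loss(recon_loss=2.0, kl_loss=0.5, beta=beta))
```

Starting with a small weight lets the model first learn to reconstruct its inputs before the KL term begins to shape the latent space. The length of the warm-up and the final value of β remain hyperparameters to tune by experiment.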

Another challenge arises from the inherent randomness of VAEs. While this randomness is key to their ability to generate new and diverse data points, it can also lead to unpredictability in the quality of the outputs. Some generated samples may significantly diverge from the desired quality or realism, and VAE outputs are often blurrier than those of some other generative models. This is particularly problematic in applications demanding high fidelity, such as medical imaging or photorealistic rendering.

Data scarcity presents yet another hurdle. Effective training of VAEs, like many deep learning models, relies on vast amounts of data. In sectors where data is scarce, sensitive, or subject to strict privacy regulations, such as healthcare, gathering sufficient training data can be difficult. This limitation can hinder the model’s ability to learn effectively, resulting in poorer performance or generalization capabilities.

Lastly, the increasingly stringent privacy and ethical considerations pose challenges in implementing VAEs, especially in sectors dealing with personal or sensitive information. The potential for VAEs to generate realistic data poses critical concerns about privacy breaches and the creation of deepfakes. As a result, developers must navigate a complex landscape of regulations and ethical considerations when deploying VAEs, often requiring additional measures like differential privacy, which can complicate the model’s design and reduce its efficiency.

In conclusion, while VAEs offer remarkable capabilities in generating novel data and insights, their practical implementation is fraught with challenges ranging from computational demands and fine-tuning difficulties to data scarcity and privacy concerns. Overcoming these challenges is crucial for leveraging VAEs’ full potential and ensuring their responsible and effective use across various domains.

The Future of VAEs

Looking ahead, Variational Autoencoders (VAEs) are set to influence several sectors further as the technology inches closer to replicating aspects of human-like creativity and decision-making. Having covered the foundational principles and current applications, let’s look at what the future might hold for VAE technology.

Integration with Emerging Technologies

As VAEs continue to mature, their integration with emerging technologies like quantum computing and blockchain could unlock new capabilities. Imagine VAEs running on quantum hardware: if such hardware delivers practical speedups for machine learning workloads, which is still an open question, this pairing could reduce the time required for complex data processing and generation tasks, bringing real-time data analysis and decision-making closer to reality.

Advancing Personalization in Real-Time

VAEs are poised to take personalization to the next level. In the realm of streaming services and online shopping, VAEs could analyze user interactions in real-time, instantly generating and recommending content or products uniquely tailored to each user’s preferences and behavior. This level of personalization could redefine user experiences, making them more engaging and satisfying.

Solving Complex Social Challenges

The future of VAEs extends beyond commercial applications, with a potentially significant impact on complex social issues. For instance, in urban planning and environmental conservation, VAEs could simulate and predict the outcomes of various strategies, helping policymakers make informed decisions that balance development with sustainability. Similarly, in disaster response and management, VAE-generated models could predict disaster impacts, aiding in the creation of more effective evacuation plans and resource allocations.

Enhanced Security and Fraud Detection

Security systems and fraud detection mechanisms are set for a revolution with advancements in VAE technology. By generating synthetic data that mirrors patterns of fraudulent activities, VAEs can train predictive models to identify and prevent potential threats with higher accuracy. This would not only bolster cybersecurity but also ensure the integrity of financial transactions and personal data.

Limitations and Ethical Considerations

Despite the promising future, advancements in VAEs are not without challenges. The technology’s reliance on vast amounts of data raises concerns about privacy and data protection. Ensuring that VAEs are developed and used ethically, with a commitment to safeguarding personal information, will be paramount. Additionally, addressing the environmental impact of the increased computational demand is crucial for sustainable advancement.

In conclusion, the path forward for Variational Autoencoders is brimming with possibilities. From revolutionizing personalization to contributing to the greater social good, VAEs are positioned to be at the forefront of the next wave of technological innovation. However, balancing the potential with mindful consideration of ethical implications and limitations will be key to unlocking a future where VAE technology enhances lives without compromising values or well-being.

Through this exploration, it becomes evident that Variational Autoencoders are more than just an AI concept; they’re a bridge to a future where the generation of new, unprecedented data becomes seamless and integrated across various sectors. As we ponder the advancements on the horizon, from improved architectures to enhanced learning algorithms, the impact of VAEs is poised to expand even further. The journey of understanding and innovating with VAEs is ongoing, and the promise they hold for addressing complex problems while pushing the boundaries of what machines can learn and create underscores their importance in the evolving narrative of artificial intelligence.

Emad Morpheus is a tech enthusiast with a unique flair for AI and art. Backed by a Computer Science background, he dove into the captivating world of AI-driven image generation five years ago. Since then, he has been honing his skills and sharing his insights on AI art creation through his blog posts. Outside his tech-art sphere, Emad enjoys photography, hiking, and piano.