Stable Diffusion Online can be used directly in your browser. This Stable Diffusion Web User Interface guide was written by AI image generator professionals and enthusiasts.

Keep reading to learn how to use Stable Diffusion for free online.

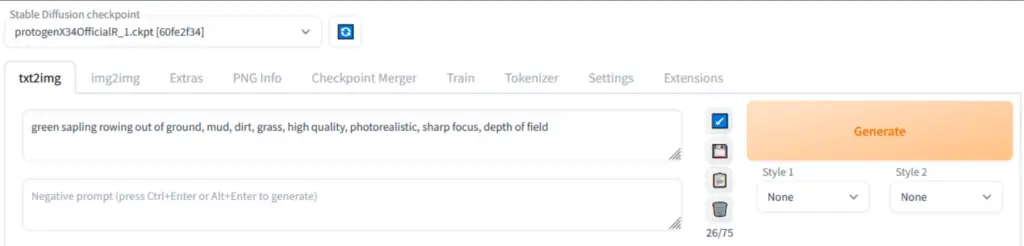

AUTOMATIC1111, often abbreviated as A1111, serves as the go-to Graphical User Interface (GUI) for advanced users of Stable Diffusion. Largely due to an enthusiastic and active user community, this Stable Diffusion GUI frequently receives updates and improvements, making it the first to offer many new features for the free Stable Diffusion platform.

However, it’s important to note that AUTOMATIC1111 isn’t the most user-friendly software out there. Its advanced features can come across as complex for those new to it. Furthermore, comprehensive documentation on how to navigate and use the interface is somewhat lacking. Therefore, it might require some time and patience to master its intricacies and fully harness its capabilities.

Contents

- 1 What is a Stable Diffusion Web UI | Your Gateway to AI Image Generation in Your Browser?

- 2 Feature Rich Environment of Stable Diffusion Web UI Online

- 2.1 Original txt2img and img2img Modes:

- 2.2 One-Click Install and Run Script:

- 2.3 Outpainting and Inpainting:

- 2.4 Color Sketch and Prompt Matrix:

- 2.5 Stable Diffusion Upscale:

- 2.6 Attention Mechanism:

- 2.7 Loopback Feature:

- 2.8 X/Y/Z Plot:

- 2.9 Textual Inversion:

- 2.10 Flexible Embeddings:

- 2.11 Half Precision Floating Point Numbers:

- 2.12 Train Embeddings on 8GB:

- 2.13 The Extras Tab: Expanding Possibilities with Additional Tools of Stable Diffusion Web UI

- 2.14 Resizing Aspect Ratio Options in Stable Diffusion Web UI:

- 2.15 Sampling Method Selection and Noise Settings:

- 2.16 Interrupt Processing at Any Time:

- 2.17 Support for Lower-Spec Video Cards:

- 2.18 Correct Seeds for Batches:

- 2.19 Live Prompt Token Length Validation:

- 2.20 Save and Utilize Generation Parameters of Stable Diffusion Web UI:

- 2.21 Read Generation Parameters Button:

- 2.22 Settings Page:

- 2.23 Running Arbitrary Python Code from UI:

- 2.24 Mouseover Hints:

- 2.25 Changeable UI Element Values:

- 2.26 Tiling Support:

- 2.27 Progress Bar and Live Image Generation Preview:

- 2.28 Separate Neural Network for Previews:

- 2.29 Negative Prompt:

- 2.30 Styles:

- 2.31 Variations:

- 2.32 Seed Resizing:

- 2.33 CLIP Interrogator:

- 2.34 Prompt Editing:

- 2.35 Batch Processing:

- 2.36 Img2img Alternative:

- 2.37 HighRes Fix:

- 2.38 Reloading Checkpoints on the Fly:

- 2.39 Checkpoint Merger:

- 2.40 Custom Scripts:

- 2.41 Composable-Diffusion:

- 2.42 No Token Limit for Prompts:

- 2.43 DeepDanbooru Integration:

- 2.44 Xformers:

- 2.45 History Tab via Extension:

- 2.46 Generate Forever Option:

- 2.47 Training Tab:

- 2.48 Preprocessing Images:

- 2.49 Clip Skip, Hypernetworks, and Loras:

- 2.50 Load Different VAEs:

- 2.51 Estimated Completion Time:

- 2.52 API and Inpainting Model Support:

- 2.53 Aesthetic Gradients via Extension:

- 2.54 Support for Stable Diffusion 2.0 and Alt-Diffusion:

- 2.55 Load Checkpoints in safetensors Format:

- 2.56 Eased Resolution Restriction:

- 2.57 UI Reordering:

- 2.58 License:

- 3 Getting Started with the Stable Diffusion Web UI Online:

- 4 Online services compatible with the Stable Diffusion Web UI

- 5 Installation of Stable Diffusion Web UI Online on Windows 10/11 with NVIDIA GPUs Using Release Package:

- 6 Automatic Installation of Stable Diffusion Web UI Online on Windows:

- 7 Automatic Installation of Stable Diffusion Web UI Online on Linux:

- 8 Credits and Acknowledgments:

- 9 Frequently Asked Questions about Stable Diffusion UI Online

- 9.1 Q: What is the Stable Diffusion UI Online?

- 9.2 Q: What are the key features of Stable Diffusion Web GUI Online?

- 9.3 Q: How can I install Stable Diffusion Web UI Online?

- 9.4 Q: What are the system requirements for running Stable Diffusion Web UI Online?

- 9.5 Q: What are some of the extra configuration options available for Stable Diffusion UI Online?

- 9.6 Q: What should I do if I encounter issues while running Stable Diffusion Web UI Online?

- 9.7 Q: Can I use Stable Diffusion UI Online on online platforms like Google Colab?

- 9.8 Q: Who deserves credit for the Stable Diffusion UI Online?

- 9.9 Q: What are the various online services that support Stable Diffusion UI Online?

- 9.10 Q: What’s the process for installing Stable Diffusion Web UI Online on Windows 10/11 with NVidia-GPUs using the release package?

- 9.11 Q: What’s the process for installing Stable Diffusion UI Online on Linux?

- 9.12 Q: How can I manually install Stable Diffusion Web UI Online?

- 9.13 Q: What are the dependencies required to run Stable Diffusion Web UI Online?

- 9.14 Q: Can I use Stable Diffusion Web UI Online on Windows 11’s WSL2?

- 9.15 Q: Can I change the default configuration of the Stable Diffusion UI Online?

What is a Stable Diffusion Web UI | Your Gateway to AI Image Generation in Your Browser?

Discover the power of AI image generation with the Stable Diffusion Web UI. This browser interface is built on the robust Gradio library, and it seamlessly integrates the functionality of Stable Diffusion into a user-friendly online platform. With this online AI image-generating tool, the complex world of AI image generation becomes easily accessible, directly in your web browser.

The Stable Diffusion Web GUI eliminates the need for multiple applications, providing an all-in-one solution for hobbyists, enthusiasts, and professionals.

Feature Rich Environment of Stable Diffusion Web UI Online

Original txt2img and img2img Modes:

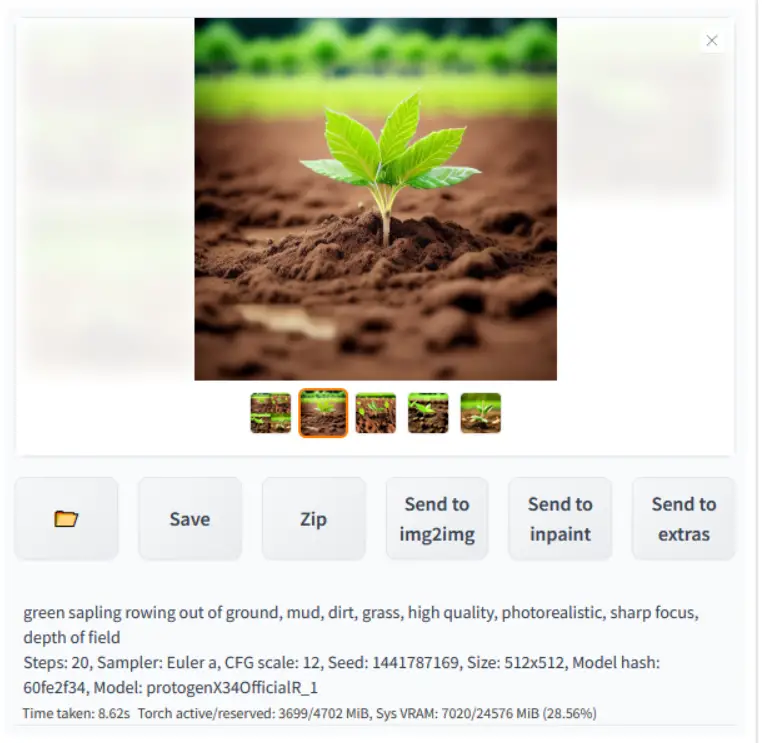

The Stable Diffusion Web GUI faithfully incorporates the foundational modes of AI image generation – text-to-image (txt2img) and image-to-image (img2img). With txt2img, users can generate images from textual descriptions, providing a handy tool for visualizing ideas. The img2img mode, on the other hand, allows for the transformation of one image into another, offering a new dimension to image manipulation.

One-Click Install and Run Script:

The platform prioritizes user convenience. The one-click install and run script minimizes setup time, enabling you to dive right into image generation. However, remember to have Python and Git pre-installed on your system to ensure a smooth start.

Outpainting and Inpainting:

These features open up a new realm of creativity. Outpainting, also known as image extrapolation, extends the content of an image beyond its original boundary, whereas inpainting, also known as image restoration, fills in the missing or corrupted parts of an image. Both of these features offer exciting possibilities for image editing and restoration.

Color Sketch and Prompt Matrix:

The Color Sketch feature adds a basic coloring tool to img2img, so you can paint rough colors onto an image before transforming it. The Prompt Matrix takes a prompt whose parts are separated by the | character and generates an image for every combination of those parts, arranged in a grid for easy comparison.

Stable Diffusion Upscale:

The platform offers superior image quality with the Stable Diffusion Upscale feature. This tool lets you enhance the resolution of your images while maintaining their original characteristics, leading to high-quality results even when working with low-resolution inputs.

Attention Mechanism:

The attention syntax lets you tell the model to pay more attention to specific parts of the prompt. For instance, “a man in a ((tuxedo))” will make the model pay more attention to “tuxedo”. You can also select text and adjust its emphasis with keyboard shortcuts (Ctrl+Up or Ctrl+Down), so the brackets do not have to be typed by hand.
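
As a rough illustration of how nested brackets translate into emphasis, the sketch below assumes that each pair of round brackets multiplies attention by 1.1 and each pair of square brackets divides it by 1.1, as the web UI's documentation describes. The function name is hypothetical, and this is not the web UI's own prompt parser.

```python
def emphasis_multiplier(round_pairs: int = 0, square_pairs: int = 0, factor: float = 1.1) -> float:
    """Return the attention multiplier for a word wrapped in nested brackets.

    Assumption: each (...) multiplies by `factor`, each [...] divides by it.
    """
    return factor ** round_pairs / factor ** square_pairs


# "a man in a ((tuxedo))" wraps "tuxedo" in two pairs of round brackets.
tuxedo_weight = emphasis_multiplier(round_pairs=2)
print(round(tuxedo_weight, 4))
```

Under this assumption, two pairs of round brackets give a multiplier of about 1.21, while a single pair of square brackets gives roughly 0.91.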

Loopback Feature:

The loopback feature allows you to run the image-to-image processing multiple times. This can result in intricate and detailed results, providing a powerful tool for artists and designers who wish to push the boundaries of traditional image manipulation.

X/Y/Z Plot:

With the X/Y/Z Plot feature, you can generate a grid of images in which up to three parameters vary along the X, Y, and Z axes. This makes it easy to compare how each setting affects the output of the AI image generation process.
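
To make the idea concrete, here is a minimal sketch of how a three-axis plot enumerates its images: one image per combination of the values on each axis. The axis choices (sampling steps, CFG scale, seed) and the function name are illustrative assumptions, not the web UI's internals.

```python
from itertools import product


def xyz_grid(x_values, y_values, z_values):
    """Return every (x, y, z) combination, one per generated image."""
    return list(product(x_values, y_values, z_values))


steps = [20, 30]               # X axis: sampling steps (illustrative)
cfg_scales = [5.0, 7.5, 10.0]  # Y axis: CFG scale (illustrative)
seeds = [1, 2]                 # Z axis: seed (illustrative)

grid = xyz_grid(steps, cfg_scales, seeds)
print(len(grid))  # 2 * 3 * 2 combinations
```

The number of images grows multiplicatively with each axis, so keeping the value lists short keeps generation time manageable.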

Textual Inversion:

The Textual Inversion feature lets you teach the model a new concept, such as a specific object or art style, from a small set of example images. The result is a small embedding file tied to a keyword of your choice, and using that keyword in a prompt brings the learned concept into the generated image without modifying the underlying model.

Flexible Embeddings:

Stable Diffusion Web GUI offers flexibility with embeddings, letting you have as many as you need and name them as you wish. You can also use different numbers of vectors per token, providing a high level of customization to meet your specific needs.

Half Precision Floating Point Numbers:

Efficient computational resource usage is critical in AI image generation. With the ability to work with half precision floating point numbers, the Stable Diffusion Web GUI can deliver high performance even on systems with limited resources.
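
The saving from half precision can be estimated with simple arithmetic: a 16-bit value takes 2 bytes where a 32-bit value takes 4. The sketch below uses a made-up parameter count purely for illustration and also shows the small rounding error that half precision introduces.

```python
import struct


def weights_size_bytes(parameter_count: int, bytes_per_value: int) -> int:
    """Memory needed to store the weights at a given precision."""
    return parameter_count * bytes_per_value


def to_half(value: float) -> float:
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


parameter_count = 1_000_000_000  # hypothetical model size, for illustration only
full = weights_size_bytes(parameter_count, 4)  # 32-bit floats
half = weights_size_bytes(parameter_count, 2)  # 16-bit floats
print(full / 1e9, half / 1e9)  # size in GB at each precision
print(to_half(0.1))            # close to 0.1, but not exactly 0.1
```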

Train Embeddings on 8GB:

Embedding training is optimized to run on graphics cards with 8GB of video memory (VRAM). There have even been reports of successful training with as little as 6GB. This makes training in the Stable Diffusion Web GUI accessible to users without high-end hardware.

The Extras Tab: Expanding Possibilities with Additional Tools of Stable Diffusion Web UI

The Stable Diffusion Web GUI’s Extras tab hosts a collection of advanced tools, enhancing its capabilities beyond the standard AI image generation:

- GFPGAN: This specialized neural network is designed for facial image enhancement. By effectively fixing issues in face images, GFPGAN provides users with an effective tool for improving facial details in their AI image generation tasks.

- CodeFormer: As an alternative to GFPGAN, CodeFormer offers another powerful solution for face restoration. Users can choose between these tools based on their specific needs and preferences.

- RealESRGAN and ESRGAN: These neural network upscalers serve to enhance the resolution of generated images. RealESRGAN provides a general upscaling solution, while ESRGAN comes equipped with numerous third-party models, offering a variety of styles and techniques for upscaling.

- SwinIR and Swin2SR: These are additional neural network upscalers available to users. SwinIR and Swin2SR offer unique capabilities in image upscaling, giving users more options for enhancing their image generation outcomes.

- LDSR: The Latent Diffusion Super Resolution (LDSR) feature is an advanced tool for upscaling, adding another layer of versatility to the platform.

The Extras tab’s inclusion of these sophisticated tools makes the Stable Diffusion Web GUI a comprehensive, feature-rich platform for AI image generation, offering users the flexibility to choose the tools that best suit their specific tasks.

Resizing Aspect Ratio Options in Stable Diffusion Web UI:

This feature enables users to adjust the aspect ratio of their generated images, allowing for specific dimensions to match their unique requirements.

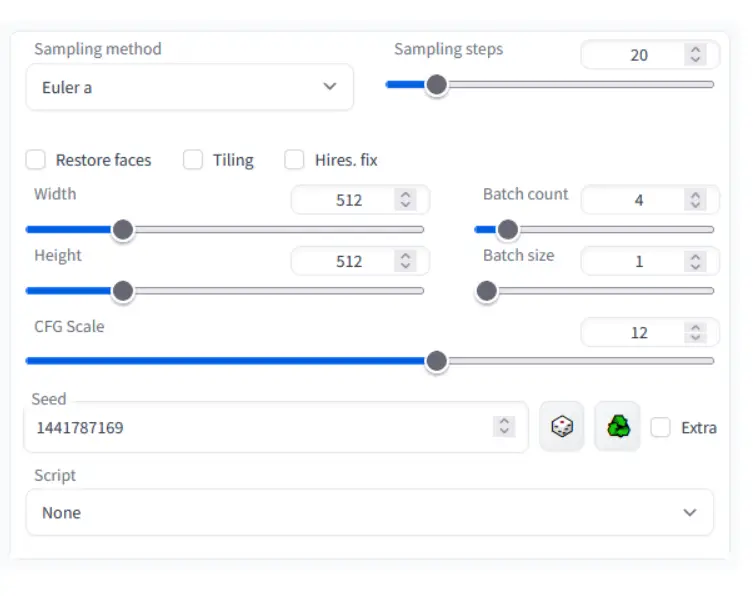

Sampling Method Selection and Noise Settings:

Users have the flexibility to select the sampling method best suited to their needs. Furthermore, they can adjust the ‘eta’ values acting as a noise multiplier in the sampling process. Additionally, the platform provides advanced noise setting options for a more granular control over the randomness and diversity in the output images.

The Stable Diffusion Web GUI is equipped with several features designed to enhance the user experience and accommodate a range of system specifications:

Interrupt Processing at Any Time:

This feature provides users with the flexibility to halt the image generation process whenever required. Whether to make adjustments or to switch tasks, you have complete control over the operations.

Support for Lower-Spec Video Cards:

The Stable Diffusion Web GUI is designed to be accessible, even to users with lower-spec video cards. While it supports 4GB video cards, there have also been successful reports of operation with 2GB cards, making the platform suitable for a broad range of systems.

Correct Seeds for Batches:

The platform ensures accurate seed values for batches, enhancing the reliability of the output. This feature helps maintain consistency in the image generation process across different batches.
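
One way to picture this is the sketch below, which assumes that each image in a batch receives the base seed plus its position in the batch. That assumption is what makes a single image from a batch reproducible on its own; the function name is hypothetical.

```python
def batch_seeds(base_seed: int, image_count: int) -> list[int]:
    """Seed assigned to each image, assuming seed = base seed + image index."""
    return [base_seed + index for index in range(image_count)]


seeds = batch_seeds(1000, 4)
print(seeds)
```

Under this assumption, regenerating the third image of the batch alone would mean running a single image with seed 1002.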

Live Prompt Token Length Validation:

This real-time validation feature checks the token length of the input prompt as it’s being entered. This immediate feedback can help users avoid potential errors and streamline the image generation process.

These features, built into the Stable Diffusion Web GUI, not only facilitate an efficient image generation process but also ensure a user-friendly experience, making it a go-to platform for AI image generation enthusiasts.

Save and Utilize Generation Parameters of Stable Diffusion Web UI:

Saving Generation Parameters:

The parameters you use to generate images are saved along with the image. This information is stored in PNG chunks for PNG images and in EXIF for JPEGs. This feature ensures that you always have access to the parameters that led to a particular output.
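
To show what "stored in PNG chunks" means in practice, the following sketch builds a tiny PNG in memory with a text chunk and reads it back using only the standard library. The keyword "parameters" and the sample text are assumptions made for illustration, and only uncompressed tEXt chunks are handled.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def read_text_chunks(png_bytes: bytes) -> dict[str, str]:
    """Collect keyword -> text pairs from the tEXt chunks of a PNG file."""
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts = {}
    offset = len(PNG_SIGNATURE)
    while offset < len(png_bytes):
        (length,) = struct.unpack(">I", png_bytes[offset:offset + 4])
        chunk_type = png_bytes[offset + 4:offset + 8]
        data = png_bytes[offset + 8:offset + 8 + length]
        if chunk_type == b"tEXt":
            keyword, _, text = data.partition(b"\x00")
            texts[keyword.decode("latin-1")] = text.decode("latin-1")
        offset += 12 + length  # 4 length + 4 type + data + 4 CRC
    return texts


# A 1x1 grayscale PNG carrying a made-up "parameters" entry (assumed keyword).
ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
idat = zlib.compress(b"\x00\x00")  # filter byte + one pixel
example_png = (
    PNG_SIGNATURE
    + make_chunk(b"IHDR", ihdr)
    + make_chunk(b"tEXt", b"parameters\x00a cat, Steps: 20, Seed: 42")
    + make_chunk(b"IDAT", idat)
    + make_chunk(b"IEND", b"")
)
print(read_text_chunks(example_png)["parameters"])
```

For real files, reading the bytes with `open(path, "rb").read()` and passing them to `read_text_chunks` would list whatever text entries the image carries.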

Restoring Generation Parameters:

You can drag the image to the PNG info tab to restore the generation parameters. The parameters are then automatically copied into the UI, enabling you to reproduce or modify a past output with ease.

Disabling Parameter Saving:

If you prefer not to save the parameters with your images, this feature can be disabled in the settings.

Drag and Drop to Promptbox:

You can drag and drop an image or text parameters directly into the promptbox. This allows for quick and efficient input, streamlining your workflow.

Read Generation Parameters Button:

This button loads the parameters present in the promptbox into the UI. This allows for quick loading of parameters, saving you the trouble of manual input.

Settings Page:

The settings page provides a space for adjusting various preferences and controls according to your needs, allowing you to customize the user experience.

Running Arbitrary Python Code from UI:

For advanced users, the platform offers the capability to run arbitrary Python code directly from the UI. This feature must be enabled by launching the web UI with the --allow-code command-line argument, and it provides a high degree of flexibility and control over the image generation process.

Mouseover Hints:

To assist users in navigating the platform, mouseover hints are provided for most UI elements. This guidance helps users understand the function of each element, improving usability and learning.

Changeable UI Element Values:

The platform allows for the customization of default, min, max, and step values for UI elements via text config. This feature provides a high degree of customization, enabling the platform to be tailored to individual user needs.
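
As an illustration, the sketch below edits such a text config in memory. It assumes the config is a JSON file (commonly named ui-config.json) whose keys follow a "tab/element label/field" pattern; the exact key names shown here are assumptions and may differ between versions.

```python
import json

# Hypothetical excerpt of the config; real key names depend on the installed version.
config_text = """
{
  "txt2img/Sampling steps/value": 20,
  "txt2img/Sampling steps/minimum": 1,
  "txt2img/Sampling steps/maximum": 150,
  "txt2img/Sampling steps/step": 1
}
"""


def set_field(config: dict, element: str, field: str, value) -> dict:
    """Return a copy of the config with one field of one UI element changed."""
    updated = dict(config)
    updated[f"{element}/{field}"] = value
    return updated


config = json.loads(config_text)
config = set_field(config, "txt2img/Sampling steps", "value", 30)
print(json.dumps(config, indent=2))
```

Working on a copy and writing the file back only after checking the result keeps a typo from breaking the UI defaults.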

Tiling Support:

The platform features a checkbox for creating images that can be tiled like textures. This feature can be particularly useful for graphic designers and artists who need patterned images.

Progress Bar and Live Image Generation Preview:

The platform features a progress bar that gives real-time updates on the image generation process. This is complemented by a live image generation preview, allowing you to see your image taking shape in real time. These features ensure you are always aware of the status of your image generation task and can make adjustments as necessary.

Separate Neural Network for Previews:

To reduce VRAM and compute requirements, the platform can use a separate, less resource-intensive neural network to produce the live previews. This ensures that even users with lower-spec systems can benefit from the live preview feature without significantly impacting performance.

Negative Prompt:

This extra text field in the Stable Diffusion UI Online allows you to specify what you do not want to see in the generated image, giving you more control over the output.

Styles:

This feature in the Stable Diffusion UI Online enables you to save parts of a prompt and apply them later via a dropdown menu, streamlining the re-use of preferred styles.

Variations:

This capability of the Stable Diffusion UI Online generates slight variations of the same image, providing a way to explore different renditions of a concept.

Seed Resizing:

This feature allows you to generate the same image but at slightly different resolutions within the Stable Diffusion UI Online, providing flexibility in output size without changing the content.

CLIP Interrogator:

This button in the Stable Diffusion UI Online allows the system to guess the prompt from an image, offering a unique way to derive textual descriptions from visual content.

Prompt Editing:

This feature allows you to change the prompt mid-generation within the Stable Diffusion UI Online. For example, you can start generating an image of a watermelon and switch to an anime girl midway through the process.
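
The switch can be pictured as a simple schedule over sampling steps. The sketch below assumes the web UI's documented `[from:to:when]` syntax, where a `when` below 1 is read as a fraction of the total steps and a larger value as a step number. The function is a hypothetical model of that behavior, not the web UI's parser, and the exact boundary step may differ in practice.

```python
def active_prompt(before: str, after: str, when: float, step: int, total_steps: int) -> str:
    """Pick the prompt text in effect at a given sampling step (0-based)."""
    # Assumption: when < 1 is a fraction of the steps, otherwise a step number.
    switch_step = int(when * total_steps) if when < 1 else int(when)
    return before if step < switch_step else after


# Models a prompt such as "[a watermelon:an anime girl:0.5]" over 20 steps.
schedule = [active_prompt("a watermelon", "an anime girl", 0.5, s, 20) for s in range(20)]
print(schedule[9], "->", schedule[10])
```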

Batch Processing:

This feature of the Stable Diffusion UI Online enables the processing of a group of files using the img2img feature, increasing efficiency when working with multiple images.

Img2img Alternative:

The Stable Diffusion UI Online offers a reverse Euler method for cross attention control, providing another option for image-to-image transformations.

HighRes Fix:

This convenience option within the Stable Diffusion UI Online allows you to produce high-resolution images in one click without the usual distortions, enhancing the quality of the outputs.

Reloading Checkpoints on the Fly:

This feature lets you switch to a different model checkpoint from within the Stable Diffusion UI Online without restarting the application.

Checkpoint Merger:

This capability of the Stable Diffusion UI Online enables you to merge up to three checkpoints into one, providing a way to blend the characteristics of different trained models into a single new model.
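
Merging amounts to simple arithmetic on matching weights. The sketch below assumes two common merge modes, a weighted sum of two models and an "add difference" mode that uses a third, and it uses plain dictionaries of floats as stand-ins for real weight tensors. All names are illustrative.

```python
def weighted_sum(a: dict, b: dict, m: float) -> dict:
    """Blend two models: A * (1 - M) + B * M for every weight."""
    return {key: a[key] * (1 - m) + b[key] * m for key in a}


def add_difference(a: dict, b: dict, c: dict, m: float) -> dict:
    """Add what B learned relative to C onto A: A + (B - C) * M."""
    return {key: a[key] + (b[key] - c[key]) * m for key in a}


# Toy "checkpoints" with a single weight each (stand-ins for tensors).
model_a = {"layer.weight": 1.0}
model_b = {"layer.weight": 3.0}
model_c = {"layer.weight": 2.0}

blended = weighted_sum(model_a, model_b, 0.25)
shifted = add_difference(model_a, model_b, model_c, 0.5)
print(blended, shifted)
```

With a multiplier of 0 the weighted sum returns model A unchanged, and with 1 it returns model B, so the multiplier acts as a slider between the two.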

Custom Scripts:

The Stable Diffusion UI Online supports custom scripts with many extensions from the community, offering a wide range of additional capabilities to enrich your AI image generation tasks.

Composable-Diffusion:

The Stable Diffusion UI Online offers the innovative feature of Composable-Diffusion. This unique capability allows you to use multiple prompts at once, opening up new possibilities for creativity and complexity in your image generation tasks.

To use multiple prompts, simply separate them using an uppercase “AND”. For example, you could use “a cat AND a dog AND a penguin” to generate an image that includes all three elements.

To further refine your output, the Stable Diffusion UI Online supports weights for prompts. By appending a colon and a number to a prompt, you control how much emphasis the model gives to that part of the image; a prompt without a number uses the default weight. For instance, in the prompt “a cat :1.2 AND a dog AND a penguin :2.2”, the model will give more emphasis to the cat and the penguin than to the dog, with the penguin having the most emphasis due to its higher weight.

The Composable-Diffusion feature of the Stable Diffusion UI Online provides a powerful way to control and customize your AI image generation tasks.
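
To make the syntax concrete, here is a minimal sketch that splits such a prompt into (text, weight) pairs. It assumes that a prompt without an explicit number gets a weight of 1.0, and the function is an illustration rather than the web UI's actual parser.

```python
import re


def parse_and_prompt(prompt: str, default_weight: float = 1.0) -> list[tuple[str, float]]:
    """Split a prompt on uppercase AND and read an optional trailing ':weight'."""
    parts = []
    for chunk in re.split(r"\bAND\b", prompt):
        match = re.fullmatch(r"\s*(.*?)\s*(?::\s*(-?\d+(?:\.\d+)?))?\s*", chunk, re.DOTALL)
        text, weight = match.group(1), match.group(2)
        parts.append((text, float(weight) if weight else default_weight))
    return parts


parsed = parse_and_prompt("a cat :1.2 AND a dog AND a penguin :2.2")
print(parsed)
```

Because the split is case-sensitive, a lowercase "and" inside a prompt stays part of the text.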

No Token Limit for Prompts:

Unlike the original Stable Diffusion which allows up to 75 tokens, the Stable Diffusion UI Online removes this limit. This means you can use prompts of any length, providing unprecedented flexibility for your AI image generation tasks.
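
One way to support long prompts is to process them in pieces. The sketch below assumes the prompt is split into consecutive chunks of up to 75 tokens, and it uses whitespace-separated words as a stand-in for the real tokenizer, which counts tokens differently.

```python
def chunk_tokens(tokens: list[str], chunk_size: int = 75) -> list[list[str]]:
    """Split a token list into consecutive chunks of at most chunk_size tokens."""
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]


# 160 placeholder "tokens"; real prompts are tokenized by the model's tokenizer.
tokens = ("word " * 160).split()
chunks = chunk_tokens(tokens)
print([len(chunk) for chunk in chunks])
```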

DeepDanbooru Integration:

For anime enthusiasts, the Stable Diffusion UI Online integrates with DeepDanbooru, creating Danbooru style tags for anime prompts. This makes it easier to generate anime-style images with the desired characteristics.

Xformers:

For users with select graphics cards, the Stable Diffusion UI Online offers a significant speed increase through the use of xformers. This can be enabled by adding --xformers to the command line arguments.

History Tab via Extension:

The Stable Diffusion UI Online also includes a handy history tab via an extension. This allows you to view, direct, and delete images conveniently within the UI, making it easier to manage your generated images.

Generate Forever Option:

For those who wish to continuously generate images, the Stable Diffusion UI Online includes a “Generate Forever” option. This feature allows the generation process to continue indefinitely, providing a constant stream of new images.

Training Tab:

The Training tab is a section of the Stable Diffusion UI Online dedicated to model training options. Here, you can customize hypernetworks and embeddings options, allowing for a high degree of control over your AI image generation model.

Preprocessing Images:

The Stable Diffusion UI Online also offers a variety of image preprocessing options. These include cropping and mirroring images, which can be useful for adjusting your images to the desired size and orientation before generation. Additionally, the platform supports autotagging using BLIP or DeepDanbooru (for anime), providing automatic generation of tags for your images based on their content.

Clip Skip, Hypernetworks, and Loras:

Clip Skip, Hypernetworks, and Loras are advanced features that provide additional flexibility and control over your image generation tasks. There’s also a separate UI where you can choose—with preview—which embeddings, hypernetworks, or Loras to add to your prompt.

Load Different VAEs:

From the settings screen, you can choose to load a different Variational Autoencoder (VAE), offering more customization options for your AI model.

Estimated Completion Time:

The progress bar includes an estimated completion time, providing real-time updates on the progress of your image generation task.

API and Inpainting Model Support:

The Stable Diffusion UI Online offers an API for integrating with other applications. It also supports a dedicated inpainting model by RunwayML, expanding its capabilities in image generation.
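
As a sketch of what calling the API from another application can look like, the code below prepares a text-to-image request using only the standard library. It assumes a local instance started with the `--api` argument and listening on the default address, the `/sdapi/v1/txt2img` endpoint, and a small set of payload fields; check the API documentation of your installed version before relying on any of these.

```python
import json
import urllib.request


def build_txt2img_request(base_url: str, prompt: str, negative_prompt: str = "",
                          steps: int = 20) -> urllib.request.Request:
    """Prepare (but do not send) a POST request for text-to-image generation."""
    payload = {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "width": 512,
        "height": 512,
    }
    return urllib.request.Request(
        url=f"{base_url}/sdapi/v1/txt2img",  # assumed endpoint path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_txt2img_request("http://127.0.0.1:7860", "a cat", negative_prompt="blurry")
print(request.full_url)

# To send it against a running instance:
#   with urllib.request.urlopen(request) as response:
#       images = json.load(response)["images"]  # assumed: base64-encoded images
```

Separating request construction from sending makes the payload easy to inspect and test without a running server.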

Aesthetic Gradients via Extension:

The platform supports an extension for Aesthetic Gradients, a method for generating images with a specific aesthetic using CLIP image embeds.

Support for Stable Diffusion 2.0 and Alt-Diffusion:

The platform is updated to support Stable Diffusion 2.0 and Alt-Diffusion, with instructions available in the wiki.

Load Checkpoints in safetensors Format:

The Stable Diffusion UI Online allows checkpoints to be loaded in safetensors format, providing more options for saving and loading models.

Eased Resolution Restriction:

The resolution restriction has been eased in the Stable Diffusion UI Online. Now, the dimensions of the generated image only need to be a multiple of 8, rather than 64.
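
A small helper shows what the relaxed rule allows. The function below is a hypothetical utility, not part of the web UI; it rounds a requested dimension down to the nearest allowed multiple.

```python
def snap_to_multiple(value: int, multiple: int = 8) -> int:
    """Round a dimension down to the nearest multiple, never below one multiple."""
    return max(multiple, (value // multiple) * multiple)


print(snap_to_multiple(520))      # already a multiple of 8, so it is kept
print(snap_to_multiple(520, 64))  # under the older rule it would drop to 512
```

Sizes such as 520 or 600 pixels, which are multiples of 8 but not of 64, become valid under the eased restriction.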

UI Reordering:

The settings screen allows you to reorder elements in the UI, providing a more customized user experience.

License:

The Stable Diffusion UI Online now comes with a license, giving users clear terms of use.

NVidia GPU Installation & Run Guide for Stable Diffusion UI Online

Getting Started with the Stable Diffusion Web UI Online:

Before you begin using the Stable Diffusion UI Online, ensure that the required dependencies are met. There are instructions available that cater specifically to NVidia (recommended) and AMD GPUs, guiding you through the setup process.

Check the Stable Diffusion UI Dependencies Before Starting the Setup!

To get started with the Stable Diffusion UI Online, you’ll need to ensure that the following dependencies are installed on your system.

Here are the steps you need to follow:

Required Dependencies (Python 3.10.6 and Git):

These are essential for running the Stable Diffusion UI Online. You can download and install Python 3.10.6 and Git based on your operating system:

- Windows: Download and run the installers for Python 3.10.6 (choose the web page, exe, or Win7 version) and Git.

- Linux (Debian-based): Run the following command:

sudo apt install wget git python3 python3-venv

- Linux (Red Hat-based): Run the following command:

sudo dnf install wget git python3

- Linux (Arch-based): Run the following command:

sudo pacman -S wget git python3

Required Dependencies to Code from this Repository:

You can obtain the code from the Stable Diffusion UI Online repository in two ways:

Preferred way:

Use Git to clone the repository with the following command:

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

This method is recommended as it allows you to update the code simply by running:

git pull

Alternative way:

Use the “Code” (green button) -> “Download ZIP” option on the main page of the repository. Note that Git still needs to be installed even if you choose this method. To update, you’ll have to download the ZIP file again and replace the files.

Optional Dependencies:

ESRGAN (Upscaling):

If you wish to use additional finetuned ESRGAN models, such as those from the Model Database, you may place them into the ESRGAN directory. Note that the ESRGAN directory doesn’t exist in the repository until you run it for the first time.

Models with the .pth extension will be loaded, and they will appear with their name in the UI.

Please remember that RealESRGAN models are not compatible with ESRGAN models, so do not download RealESRGAN models or place them into the directory with ESRGAN models.

With these dependencies installed and the code from the repository, you are ready to start generating images with the Stable Diffusion UI Online.

Check this guide about how to install and run Stable Diffusion on NVidia GPUs.

If you prefer not to install anything on your local machine, there’s an alternative! You can use online services such as Google Colab to access the Stable Diffusion UI Online. This provides an easy, no-installation-required approach to using this powerful AI image generation tool.

Online services compatible with the Stable Diffusion Web UI

Here is a list of online services compatible with the Stable Diffusion UI Online:

Running Stable Diffusion UI Online on Google Colab:

If you’d like to use Google Colab for running the Stable Diffusion UI Online, there are several notebooks maintained by the community that you can use. Google Colab allows you to run the application on a virtual machine in the cloud, saving you from having to install anything on your local machine.

Here are the links to various Google Colab Stable Diffusion notebooks:

- Notebook maintained by TheLastBen

- Notebook maintained by camenduru

- Notebook maintained by ddPn08

- Notebook maintained by Akaibu

- Original Colab notebook by AUTOMATIC1111 (outdated)

By using these notebooks, you can leverage the power of Google Colab’s GPUs to run the Stable Diffusion UI Online and generate images.

Running Stable Diffusion Web UI Online on Paperspace:

If you’re interested in using Paperspace, a powerful cloud computing platform, for running the Stable Diffusion UI Online, there’s a setup maintained by a community member that you can use.

Paperspace provides you with a virtual machine in the cloud, saving you from having to install anything on your local machine and providing you with powerful computing resources. By using this setup, you can leverage the capabilities of Paperspace to run the Stable Diffusion UI Online and generate images.

Running Stable Diffusion Web GUI Online on SageMaker Studio Lab:

For those who prefer to use Amazon’s SageMaker Studio Lab, a fully integrated development environment for machine learning, there’s a setup maintained by a community member that you can utilize.

SageMaker Studio Lab provides you with a cloud-based virtual machine, alleviating the need to install anything on your local system and offering you robust computing resources. By leveraging this setup, you can utilize SageMaker Studio Lab’s capabilities to run the Stable Diffusion UI Online and generate creative images.

Running Stable Diffusion GUI Online with Docker:

If you’re a fan of Docker for its ability to create isolated environments called containers, there are Docker setups maintained by community members that you can use to run the Stable Diffusion UI Online.

Here are the Docker setups:

By using Docker, you can run the Stable Diffusion UI Online in a container, ensuring that the dependencies of the application don’t interfere with your local system. This also makes it easy to start, stop, and restart the application.

Each of these features contributes to making the Stable Diffusion Web UI a comprehensive, powerful, and user-friendly tool for AI image generation in your browser.

Installation of Stable Diffusion Web UI Online on Windows 10/11 with NVIDIA GPUs Using Release Package:

Setting up the Stable Diffusion UI Online on Windows 10/11 systems with NVIDIA GPUs can be accomplished in just a few simple steps using the release package.

- Download and Extract: Download the release package sd.webui.zip from v1.0.0-pre and extract its contents to a location of your choice on your system.

- Update the Setup: Run the update.bat script. This will ensure that you have the latest version of the Stable Diffusion UI Online.

- Launch the Application: Finally, run the run.bat script to start the Stable Diffusion UI Online. This will launch the application in your default web browser.

For more detailed instructions, refer to the Install and Run on NVIDIA GPUs guide.

By following these steps, you can quickly and easily get the Stable Diffusion UI Online up and running on your Windows 10/11 system with an NVIDIA GPU.

Automatic Installation of Stable Diffusion Web UI Online on Windows:

For those who prefer a hands-off approach, you can use the automatic installation process to set up the Stable Diffusion UI Online on your Windows system. Here are the steps:

- Install Python 3.10.6: Download and install Python 3.10.6. Make sure to check the “Add Python to PATH” option during the installation process. Please note that the torch library that Stable Diffusion requires may not support newer versions of Python.

- Install Git: Download and install Git, which is a version control system that will allow you to clone the Stable Diffusion repository.

- Clone the Repository: Download the Stable Diffusion repository by running the following command in a command prompt:

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

- Run the Batch File: Finally, navigate to the directory where you cloned the repository and run webui-user.bat from Windows Explorer. Make sure to do this as a normal, non-administrator user.

By following these steps, you can automatically install and run the Stable Diffusion UI Online on your Windows system.

Automatic Installation of Stable Diffusion Web UI Online on Linux:

If you’re running a Linux system, you can follow these steps to automatically install and run the Stable Diffusion Web UI Online:

- Install the Dependencies: Depending on your Linux distribution, run the appropriate command to install the necessary dependencies:

- Debian-based:

sudo apt install wget git python3 python3-venv

- Red Hat-based:

sudo dnf install wget git python3

- Arch-based:

sudo pacman -S wget git python3

- Download and Install: Navigate to the directory where you want the web UI to be installed and execute the following command:

bash <(wget -qO- https://raw.githubusercontent.com/AUTOMATIC1111/stable-diffusion-webui/master/webui.sh)

This will download and run a shell script that sets up the Stable Diffusion UI Online.

- Run the Shell Script: Run webui.sh to start the Stable Diffusion UI Online.

- Check for Options: For additional configuration options, you can check the webui-user.sh file.

By following these steps, you can automatically install and run the Stable Diffusion UI Online on your Linux system.

Credits and Acknowledgments:

Licenses for borrowed code can be found in the ‘Settings -> Licenses’ screen of the Stable Diffusion UI Online, as well as in the html/licenses.html file.

We would like to express our gratitude to the following individuals and projects for their contributions, ideas, and inspirations:

- Stable Diffusion: CompVis/stable-diffusion, CompVis/taming-transformers

- k-diffusion: crowsonkb/k-diffusion

- GFPGAN: TencentARC/GFPGAN

- CodeFormer: sczhou/CodeFormer

- ESRGAN: xinntao/ESRGAN

- SwinIR: JingyunLiang/SwinIR

- Swin2SR: mv-lab/swin2sr

- LDSR: Hafiidz/latent-diffusion

- MiDaS: isl-org/MiDaS

- Ideas for optimizations: basujindal/stable-diffusion

- Cross Attention layer optimization: Doggettx/stable-diffusion, invoke-ai/InvokeAI

- Sub-quadratic Cross Attention layer optimization: Birch-san/diffusers#1, AminRezaei0x443/memory-efficient-attention

- Textual Inversion: rinongal/textual_inversion

- SD upscale: jquesnelle/txt2imghd

- Noise generation for outpainting mk2: parlance-zz/g-diffuser-bot

- CLIP interrogator: pharmapsychotic/clip-interrogator

- Composable Diffusion: energy-based-model/Compositional-Visual-Generation-with-Composable-Diffusion-Models-PyTorch

- xformers: facebookresearch/xformers

- DeepDanbooru: KichangKim/DeepDanbooru

- Sampling in float32 precision from a float16 UNet: Birch-san’s example Diffusers implementation

- Instruct pix2pix: timothybrooks/instruct-pix2pix

- Security advice: RyotaK

- UniPC sampler: wl-zhao/UniPC

- TAESD: madebyollin/taesd

- LyCORIS: KohakuBlueleaf

- Initial Gradio script: Posted on 4chan by an Anonymous user. Thank you, Anonymous user.

Our thanks extend to everyone who has contributed directly or indirectly to the Stable Diffusion Web UI Online project.

Frequently Asked Questions about Stable Diffusion UI Online

Q: What is the Stable Diffusion UI Online?

A: Stable Diffusion Web UI Online is a web-based interface for Stable Diffusion, built on the Gradio library. It is designed for AI image generation enthusiasts, from hobbyists to professionals.

Q: What are the key features of Stable Diffusion Web GUI Online?

A: Stable Diffusion UI Online offers a variety of features, including the original txt2img and img2img modes, a one-click install and run script, outpainting, inpainting, color sketch, prompt matrix, Stable Diffusion upscale, and many more.

Q: How can I install Stable Diffusion Web UI Online?

A: You can install Stable Diffusion Web UI Online by ensuring you have the necessary dependencies, including Python 3.10.6 and Git. The instructions for installing on NVidia GPUs, Windows, and Linux systems are provided in the above text.

Q: What are the system requirements for running Stable Diffusion Web UI Online?

A: While the exact requirements will vary based on the operations you’re performing, you’ll typically need a modern GPU with at least 4 GB of VRAM (though some users have reported success with 2 GB graphics cards). Ideally, the system should also have 12 GB of RAM.

Q: What are some of the extra configuration options available for Stable Diffusion UI Online?

A: There are several configuration options that you may want to apply to the web UI. These include, but are not limited to, auto-launching the browser page after the UI has started, checking for new versions of the UI at launch, and others.
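Options like these are typically passed as flags through the COMMANDLINE_ARGS variable in webui-user.bat or webui-user.sh. The sketch below builds such a line in Python as an illustration. The helper name `commandline_args_line` is hypothetical, and the flag names `--autolaunch` and `--update-check` are assumptions taken from the project's command-line options, so check them against your installed version.

```python
def commandline_args_line(flags, windows=False):
    """Build the COMMANDLINE_ARGS line for webui-user.bat or webui-user.sh.

    Hypothetical helper for illustration; it only formats text and does not
    modify any file.
    """
    joined = " ".join(flags)
    if windows:
        # Batch syntax used in webui-user.bat.
        return f"set COMMANDLINE_ARGS={joined}"
    # Shell syntax used in webui-user.sh.
    return f'export COMMANDLINE_ARGS="{joined}"'


if __name__ == "__main__":
    # Assumed flags: open the browser after startup, check for updates at launch.
    flags = ["--autolaunch", "--update-check"]
    print(commandline_args_line(flags, windows=True))
    print(commandline_args_line(flags))
```

The printed line can then be pasted into the matching file in place of the existing COMMANDLINE_ARGS line.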

Q: What should I do if I encounter issues while running Stable Diffusion Web UI Online?

A: There are various troubleshooting strategies available depending on the issue you’re facing. These include options for handling GPUs with less VRAM, dealing with black or green images, and handling NansException.

Q: Can I use Stable Diffusion UI Online on online platforms like Google Colab?

A: Yes, there are maintained Google Colab notebooks that you can use to run Stable Diffusion UI Online.

Q: Who deserves credit for the Stable Diffusion UI Online?

A: The Stable Diffusion UI Online project has benefited from the contributions, ideas, and inspirations of many individuals and projects, as detailed in the ‘Credits and Acknowledgments’ section above.

Q: What are the various online services that support Stable Diffusion UI Online?

A: In addition to Google Colab, other online platforms such as Paperspace and SageMaker Studio Lab also support Stable Diffusion Web UI Online. Docker images are also available for containerized deployment.

Q: What’s the process for installing Stable Diffusion Web UI Online on Windows 10/11 with NVidia-GPUs using the release package?

A: You can download the sd.webui.zip from v1.0.0-pre and extract its contents. Run update.bat and then run.bat. You can find more details in the ‘Install-and-Run-on-NVidia-GPUs’ section.

Q: What’s the process for installing Stable Diffusion UI Online on Linux?

A: On Linux, first install the dependencies for your distribution, then use a one-liner that downloads and runs the setup script: bash <(wget -qO- https://raw.githubusercontent.com/AUTOMATIC1111/stable-diffusion-webui/master/webui.sh). After that, you can run the UI with webui.sh.

Q: How can I manually install Stable Diffusion Web UI Online?

A: While manual installation is considered outdated and may not work, you can find the process in the ‘Manual Installation’ section. However, it’s recommended to use the automatic installation process for a smoother experience.

Q: What are the dependencies required to run Stable Diffusion Web UI Online?

A: The primary dependencies are Python 3.10.6 and Git. There are also optional dependencies like ESRGAN for upscaling. You can download and install these dependencies as per the guidelines provided in the ‘Required Dependencies’ section.

Q: Can I use Stable Diffusion Web UI Online on Windows 11’s WSL2?

A: Yes, there are specific instructions for installing Stable Diffusion Web UI Online on Windows 11’s WSL2. You can find these in the ‘Windows 11 WSL2 instructions’ section.

Q: Can I change the default configuration of the Stable Diffusion UI Online?

A: Yes, the Stable Diffusion Web UI Online allows for a wide range of customizations. You can adjust the default values of various UI elements, the noise settings, sampler eta values (noise multiplier), and many more parameters.