Mastering Prompts in Stable Diffusion

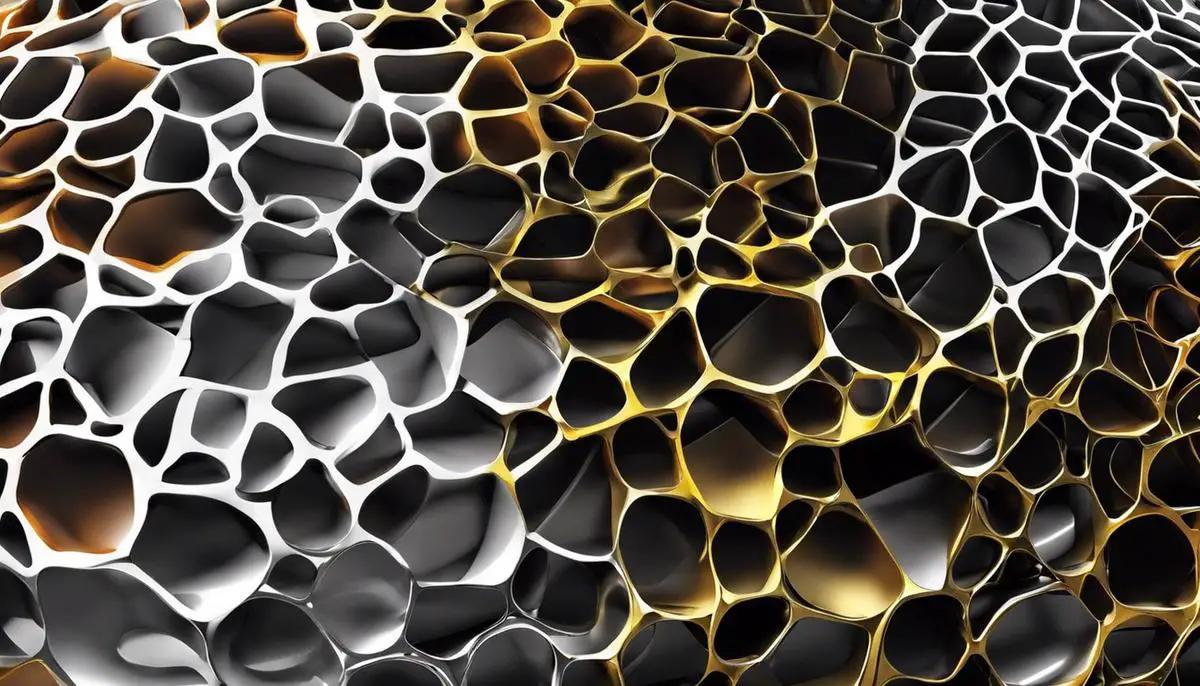

AI-driven image generation has opened a new era of content creation, led by text-to-image models like Stable Diffusion. At the heart of these models lies the ‘prompt,’ the text description that steers what the model generates. By understanding and mastering the intricacies of prompts, professionals …